
Stuff I might do if I had covid

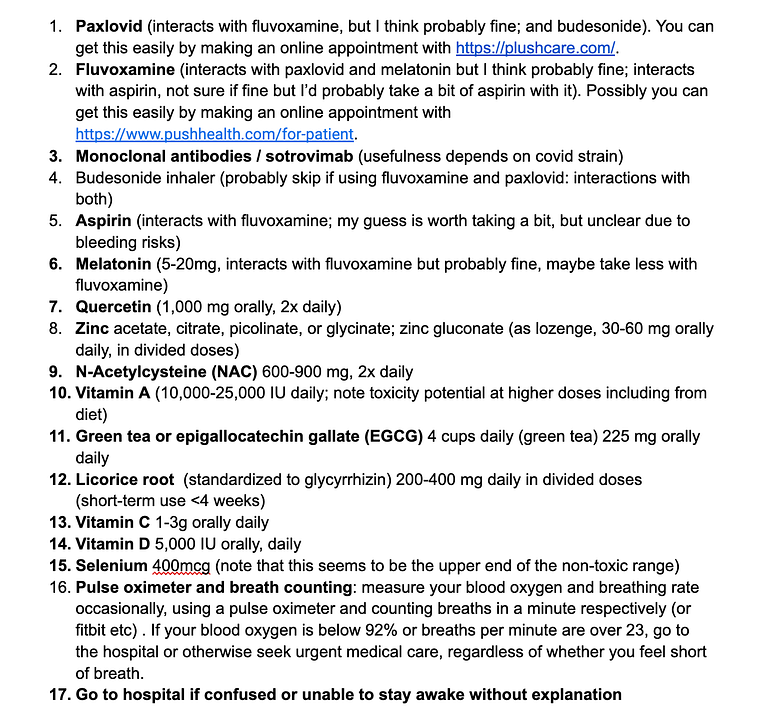

In case anyone wants a rough and likely inaccurate guide to what I might do to mitigate covid if I had it, I looked into this a bit recently and wrote notes. It’s probably better than a plan based on less than a few hours of research, but it is likely flawed all over the place, wasn’t written with public sharing in mind, and, um, isn’t medical advice [ETA May 11: also, safety-relevant improvements are being made in the doc version, so I recommend looking at that.]:

Continue reading →

Why do people avoid vaccination?

I’ve been fairly confused by how popular it is in the US to remain unvaccinated, in the face of what seems to be a non-negligible, relatively immediate personal chance of death or intense illness. And due to the bubbliness of society, I don’t actually seem to know any unvaccinated people to ask about it. So in the recent covid survey I ran, I asked people who hadn’t had covid (and thus about whom I didn’t have more pressing questions) whether they were vaccinated, and if not, why not. (Note, though, that these people are 20-40 years old, so not at huge risk of death.)

Continue reading →

Bernal Heights: acquisition of a bicycle

The measure of a good bicycle, according to me, is that you can’t ride it without opening your mouth in joy and occasionally exclaiming things like ‘fuck yeah bicycle’. This is an idiosyncratic spec, and I had no reason to think that it might be fulfilled by any electric bicycle—a genre I was new to—so while I intended to maybe search through every electric bicycle in The New Wheel for one that produced irrepressible, physically manifest joy, I also expected this to likely fail, and to be meanwhile embarrassingly inexplicable and irritating to bike shop employees—people who often expect one’s regard for bikes to map fairly well to facts about their price, frame shape, and whether the gears are Shimano. But several bikes in, when I uncomfortably explained to a guy there that, while the ones I had tried so far were nice, bicycles had been known to make me, like, very happy, he said of course we should find the bicycle that I loved. So I at least felt somewhat supported in my ongoing disruption of his colleague’s afternoon, with requests that bicycle after bicycle be brought out for me to pedal around the streets of Bernal Heights.

The guy would maneuver each bike out of the crowded shop and onto the sidewalk, and adjust it to fit me, and we would chat, often about his suggestion that I maybe ride up to the hill on the other side of the main road, which I would agree might be a good idea, before riding off, deciding that turning left was too hard, and heading in the other direction, through back streets and around a swooping circle park with a big ring road, where I would loop a few times if the mood took me.

Some bicycles were heavy, and rode like refrigerators. Most bicycles were unsteady, and urged even my cycling-seasoned bottom to the seat while pedaling. Most bicycles added considerable assistance to going up hills. Many bicycles seemed fine.

Continue reading →

Positly covid survey 2: controlled productivity data

(This continues reporting data from the survey I did the other day on Positly; some other bits are here and here.)

Before asking everyone whether they had had covid, had ongoing problems from it, or anything else, I asked them about their recent productivity relative to 2019. I hoped this would minimize the influence of their narratives about their covid situation (and how it should affect their productivity) on their productivity estimates.

The clearest takeaway, I think: people who would later report ongoing post-covid symptoms also reported being way less productive on average than people who didn’t report any covid.

Continue reading →

Positly covid survey: long covid

Here are some more careful results from a survey I ran the other day on Positly, to test whether it’s trivial to find people who have had their lives seriously impacted by long covid, and to get a better sense of the distribution of what people mean by things like ‘brain fog’ in bigger, vaguer research efforts.

Continue reading →

Long covid: probably worth avoiding—some considerations

I hear friends reasoning, “I’ll get covid eventually and long covid probably isn’t that bad; therefore it’s not worth much to avoid it now”. Here are some things informing my sense that that’s an error:

Continue reading →

Survey supports ‘long covid is bad’ hypothesis (very tentative)

I wanted more clues about whether really bad long covid outcomes were vanishingly rare (but concentrated a lot in my Twitter) or whether, for instance, a large fraction of the ‘brain fogs’ reported in datasets are anything like the horrors sometimes described. So I took my questions to Positly, hoping that the set of people who would answer questions for money there was fairly random relative to covid outcomes.

I hope to write something more careful about this survey soon, especially if it is of interest, but I figure the basic data is better shared sooner. This summary is not very careful, and may, e.g., conflate slightly differently worded questions, fail to exclude obviously confused answers, or slightly miscount.

This is a survey of ~230 Positly survey takers in the US, all between 20 and 40 years old. Very few of the responses I’ve looked at seem incoherent or botlike, unlike those in the survey I did around the time of the election.

Continue reading →

Beyond fire alarms: freeing the groupstruck

Crossposted from AI Impacts

[Content warning: death in fires, death in machine apocalypse]

‘No fire alarms for AGI’

Eliezer Yudkowsky wrote that ‘there’s no fire alarm for Artificial General Intelligence’, by which I think he meant: ‘there will be no future AI development that proves that artificial general intelligence (AGI) is a problem clearly enough that the world gets common knowledge (i.e. everyone knows that everyone knows, etc.) that freaking out about AGI is socially acceptable instead of embarrassing.’

He calls this kind of event a ‘fire alarm’ because he posits that this is how fire alarms work: rather than alerting you to a fire, they primarily help by making it common knowledge that it has become socially acceptable to act on the potential fire.

He supports this view with a great 1968 study by Darley and Latané, in which they found that if you pipe a white plume of ‘smoke’ through a vent into a room where participants fill out surveys, a lone participant will quickly leave to report it, whereas a group of three (innocent) participants will tend to sit by in the haze for much longer[^1].

Here’s a video of a rerun[^2] of part of this experiment, if you want to see what people look like while they try to negotiate the dual dangers of fire and social awkwardness.

Continue reading →

Punishing the good

Should you punish people for wronging others, or for making the wrong call about wronging others?

Continue reading →

Lafayette: empty traffic signals

Seeking to cross a road on the walk into downtown Lafayette, instead of the normal pedestrian crossing situation, we met a button with a sign, ‘Push button to turn on warning lights’. I wondered, if I pressed it, would it then be my turn to cross? Or would there just be some warning lights? What was the difference? Do traffic buttons normally do something other than change the lights?

Continue reading →

EVERYTHING — WORLDLY POSITIONS — METEUPHORIC