-

Typology of blog posts that don't always add anything clear and insightful

I used to think a good blog post should basically be a description of a novel insight.

-

Do incoherent entities have stronger reason to become more coherent than less?

My understanding is that various ‘coherence arguments’ exist, of the form:

- If your preferences diverged from being representable by a utility function in some way, then you would do strictly worse in some way than by having some kind of preferences that were representable by a utility function. For instance, you would lose money, for nothing.

- You have good reason not to do that / don’t do that / you should predict that reasonable creatures will stop doing that if they notice that they are doing it.
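A toy illustration of the first point (incoherent preferences losing money for nothing), sketched in Python. Everything in it is hypothetical and not from the post: the items `A`, `B`, `C`, the fee, the order of offers, and the helper `money_pump`. An agent with cyclic preferences pays a fee for every trade it likes and ends up holding what it started with, minus the fees; an agent whose preferences come from a utility function is never led around the loop.

```python
# Toy money pump. All names and numbers here are hypothetical illustrations.

def money_pump(prefers, start, offers, fee):
    """Offer each item in `offers` in turn. The agent swaps its current item
    for the offered one whenever it strictly prefers the offered item, paying
    `fee` per swap. `prefers` is a set of (better, worse) pairs.
    Returns (final_item, total_fees_paid)."""
    item, paid = start, 0.0
    for offered in offers:
        if (offered, item) in prefers:
            item, paid = offered, paid + fee
    return item, paid


# Cyclic preferences: A over B, B over C, and C over A.
CYCLIC = {("A", "B"), ("B", "C"), ("C", "A")}

# Preferences derived from a utility function (consistent numerical values).
utility = {"A": 3, "B": 2, "C": 1}
COHERENT = {(x, y) for x in utility for y in utility if utility[x] > utility[y]}

offers = ["C", "B", "A"] * 3  # go around the loop three times

print(money_pump(CYCLIC, "A", offers, fee=1.0))    # ('A', 9.0): back to A, 9 poorer
print(money_pump(COHERENT, "A", offers, fee=1.0))  # ('A', 0.0): refuses every trade
```

Preferences are represented in the simplest way possible, as a set of (preferred, dispreferred) pairs. When they come from utilities, every accepted trade strictly raises utility, so the agent's holdings can never cycle back to where they began; with the cyclic preferences, nothing stops that.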

-

Holidaying and purpose

I’m on holiday. A basic issue with holidays is that it feels more satisfying and meaningful to do purposeful things, but for a thing to actually serve a purpose, it often needs to pass a higher bar than a less purposeful thing does. In particular, you often have to finish a thing and do it well in order for it to achieve its purpose. And finishing things well is generally harder and less fun than starting them, and so in other ways contrary to holidaying.

-

Coherence arguments imply a force for goal-directed behavior

Crossposted from AI Impacts

[Epistemic status: my current view, but I haven’t read all the stuff on this topic even in the LessWrong community, let alone more broadly.]

There is a line of thought that says that advanced AI will tend to be ‘goal-directed’—that is, consistently doing whatever makes certain favored outcomes more likely—and that this is to do with the ‘coherence arguments’. Rohin Shah, and probably others[1], have argued against this. I want to argue against them.

The old argument for coherence implying (worrisome) goal-directedness

I’d reconstruct the original argument that Rohin is arguing against as something like this (making no claim about my own beliefs here):

- ‘Whatever things you care about, you are best off assigning consistent numerical values to them and maximizing the expected sum of those values’

‘Coherence arguments’[2] mean that if you don’t maximize ‘expected utility’ (EU)—that is, if you don’t make every choice in accordance with what gets the highest average score, given consistent preferability scores that you assign to all outcomes—then you will make strictly worse choices by your own lights than if you followed some alternate EU-maximizing strategy (at least in some situations, though they may not arise). For instance, you’ll be vulnerable to ‘money-pumping’—being predictably parted from your money for nothing.[3]

- ‘Advanced AI will tend to do better things instead of worse things, by its own lights’

Advanced AI will tend to avoid options that are predictably strictly worse by its own lights, due to being highly optimized for making good choices (by some combination of external processes that produced it, its own efforts, and the selection pressure acting on its existence).

- ‘Therefore advanced AI will maximize EU, roughly’

Advanced AI will tend to be fairly coherent, at least to a level of approximation where becoming more coherent isn’t worth the cost.[4] That level will probably be fairly coherent (e.g. close enough to coherent that humans can’t anticipate the inconsistencies).

- ‘Maximizing EU is pretty much the same as being goal-directed’

To maximize expected utility is to pursue the goal of that which you have assigned higher utility to.[5]
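For concreteness, here is a minimal Python sketch of what ‘maximizing EU’ means in the reconstruction above: assign consistent scores to outcomes, score each option by the probability-weighted average of its outcomes' scores, and pick the highest. The outcomes, probabilities, scores, and function names are all made up for illustration.

```python
# Hypothetical preferability scores (utilities) for outcomes.
utility = {"win": 10.0, "draw": 4.0, "lose": 0.0}

# Each option is a lottery: a list of (probability, outcome) pairs.
options = {
    "safe": [(1.0, "draw")],
    "gamble": [(0.5, "win"), (0.5, "lose")],
}


def expected_utility(lottery, utility):
    """Probability-weighted average of the utilities of the outcomes."""
    return sum(p * utility[outcome] for p, outcome in lottery)


def eu_choice(options, utility):
    """Pick the option whose lottery has the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name], utility))


print(eu_choice(options, utility))  # gamble (expected utility 5.0 vs 4.0)
```

Note that the choice depends on the whole ordering of scores, not just on the top outcome, which is the distinction footnote 5 draws between EU-maximizing and having a single ‘goal’.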

And since the point of all this is to argue that advanced AI might be hard to deal with, note that we can get to that conclusion with:

-

[1] For instance, Richard Ngo agrees here, and Eric Drexler makes a related argument here, section 6.4.

[2] I haven’t read all of this, and don’t yet see watertight versions of these arguments, but this is not the time I’m going to get into that.

[4] Assuming being ‘more coherent’ is meaningful and better than being ‘less coherent’, granting that one is not coherent, which sounds plausible, but which I haven’t got into. One argument against is that if you are incoherent at all, then it looks to me like you can logically evaluate any bundle of things at any price. This would seem to make all incoherences identical—much like how all logical contradictions equivalently lead to every belief. However, this seems unlikely to predict well how creatures behave in practice if they have incoherent preferences.

[5] This isn’t quite right, since ‘goal’ suggests one outcome that is being pursued ahead of all others, whereas EU-maximizing implies that all possible outcomes have an ordering, and you care about getting higher ones in general, not just the top one above all others, but this doesn’t seem like a particularly relevant distinction here.


-

Animal faces

[Epistemic status: not reflective of the forefront of human understanding, or human understanding after any research at all. Animal pictures with speculative questions.]

Do the facial expressions of animals mean anything like what I’m inclined to take them to mean?

-

Quarantine variety

Among people sheltering from covid, I think there is a common thought that being stuck in your home for a year begets a certain sameyness that it will be nice to be done with.

It’s interesting to me to remember that a big chunk of the variety that is missing in life comes from regular encounters with other people, and their mind-blowing tendencies to do and think differently to me, and jump to different conclusions, and not even know what I’m talking about when I mention the most basic of basic assumptions.

And to remember that many of those people are stuck in similar houses, similarly wishing for variety, but being somewhat tired of a whole different set of behaviors and thoughts and framings and assumptions.

Which means that the variety is not fully out of safe reach in the way that, say, a big lick-a-stranger party might be. At least some of it is just informationally inaccessible, like finding the correct answer to a hard math problem. If I could somehow spend a day living the way the person stuck in the house across the street lives, I would see all kinds of new things. My home itself—especially with its connection to the internet and Amazon—is capable of vastly more variety than I typically see.

-

Why does Applied Divinity Studies think EA hasn't grown since 2015?

Applied Divinity Studies seeks to explain why the EA community hasn’t grown since 2015. The observations they initially offer as evidence that the EA community hasn’t grown are:

1) GiveWell money moved increased a lot in 2015, then has grown only slightly since.

2) Open Phil (I guess money allocated) hasn’t increased since 2017.

3) Google Trends “Effective Altruism” ‘grows quickly starting in 2013, peaks in 2017, then falls back down to around 2015 levels’.

Looking at the graph they illustrate with, 1) is because GiveWell started receiving a large chunk of money from Open Phil in 2015, and that chunk stayed around the same size over the years, while the money not from Open Phil has grown.

So 1) and 2) are both the observation, “Open Phil has not scaled up its money-moving in recent years”.

I’m confused about why this observation seems suggestive of the size of the EA community. Open Phil is not a community small-donations collector. You can’t even donate to Open Phil. It is mainly moving Good Ventures’ money, i.e. the money of a single couple: Dustin Moskovitz and Cari Tuna.

-

Sleep math: red clay blue clay

To me, going to bed often feels more like a tiresome deprivation from life than a welcome rest, or a painless detour through oblivion to morning. When I lack patience for it, I like to think about math puzzles. Other purposeful lines of thought keep me awake or lose me, but math leads me happily into a world of abstraction, from which the trip to dreamland comes naturally.

(It doesn’t always work. Once I was still awake after seemingly solving two Putnam problems, which is about as well as I did in the actual Putnam contest.)

A good puzzle for this purpose should be easy to play with in one’s head. For me, that means it should be amenable to simple visualization, and shouldn’t have the kind of description you have to look at multiple times. A handful of blobs is a great subject matter; an infinite arrangement of algebra is not.

Recently I’ve been going to sleep thinking about the following puzzle. I got several nights of agreeable sleep out of it, but now I think I have a good solution, which I’ll probably post in future.

Suppose that you have 1 kg of red clay that is 100 degrees and 1 kg of blue clay that is 0 degrees. You can divide and recombine clay freely. If two pieces of clay come into contact, temperature immediately equilibrates—if you put the 1 kg of red clay next to 0.5 kg of blue clay, all the clay will immediately become 66⅔ degrees. Other than that the temperature of the clay doesn’t change (i.e. no exchange with air or your hands, no radiation, etc.). Your goal is to end up with all of the blue clay in a single clump that is as hot as possible. How hot can you make it? (Equivalently: how cold can you make the red clay?)

HT Chelsea Voss via Paul Christiano
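A small Python sketch for playing with the puzzle, not a solution to it. It assumes, as the example with 0.5 kg of blue clay implies, that every kg of clay has the same heat capacity, so touching pieces settle at their mass-weighted average temperature. `sequential_strategy` is one hypothetical approach chosen for illustration: cut the red clay into n equal pieces and touch each in turn to the whole blue lump.

```python
def equilibrate(pieces):
    """Common temperature after the given pieces of clay touch.

    Each piece is a (mass_kg, temperature) pair. Assumes every kg of clay has
    the same heat capacity, so the result is the mass-weighted mean.
    """
    total_mass = sum(mass for mass, _ in pieces)
    return sum(mass * temp for mass, temp in pieces) / total_mass


def sequential_strategy(n):
    """Hypothetical strategy: cut the 100-degree red clay into n equal pieces
    and touch each, one at a time, to the whole 1 kg lump of 0-degree blue
    clay, pulling it away again before the next. Returns the blue clay's
    final temperature."""
    blue_mass, blue_temp = 1.0, 0.0
    piece_mass = 1.0 / n
    for _ in range(n):
        blue_temp = equilibrate([(blue_mass, blue_temp), (piece_mass, 100.0)])
    return blue_temp


print(equilibrate([(1.0, 100.0), (0.5, 0.0)]))  # ≈ 66.67, the example above
for n in (1, 2, 10, 1000):
    print(n, round(sequential_strategy(n), 2))
```

For this particular strategy, each piece shrinks the blue clay's gap to 100 degrees by a factor of n/(n+1), so it ends at 100(1 − (n/(n+1))^n) degrees, which approaches 100(1 − 1/e), about 63.2 degrees, as n grows. Whether other ways of dividing and recombining the clay do better is the puzzle.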

-

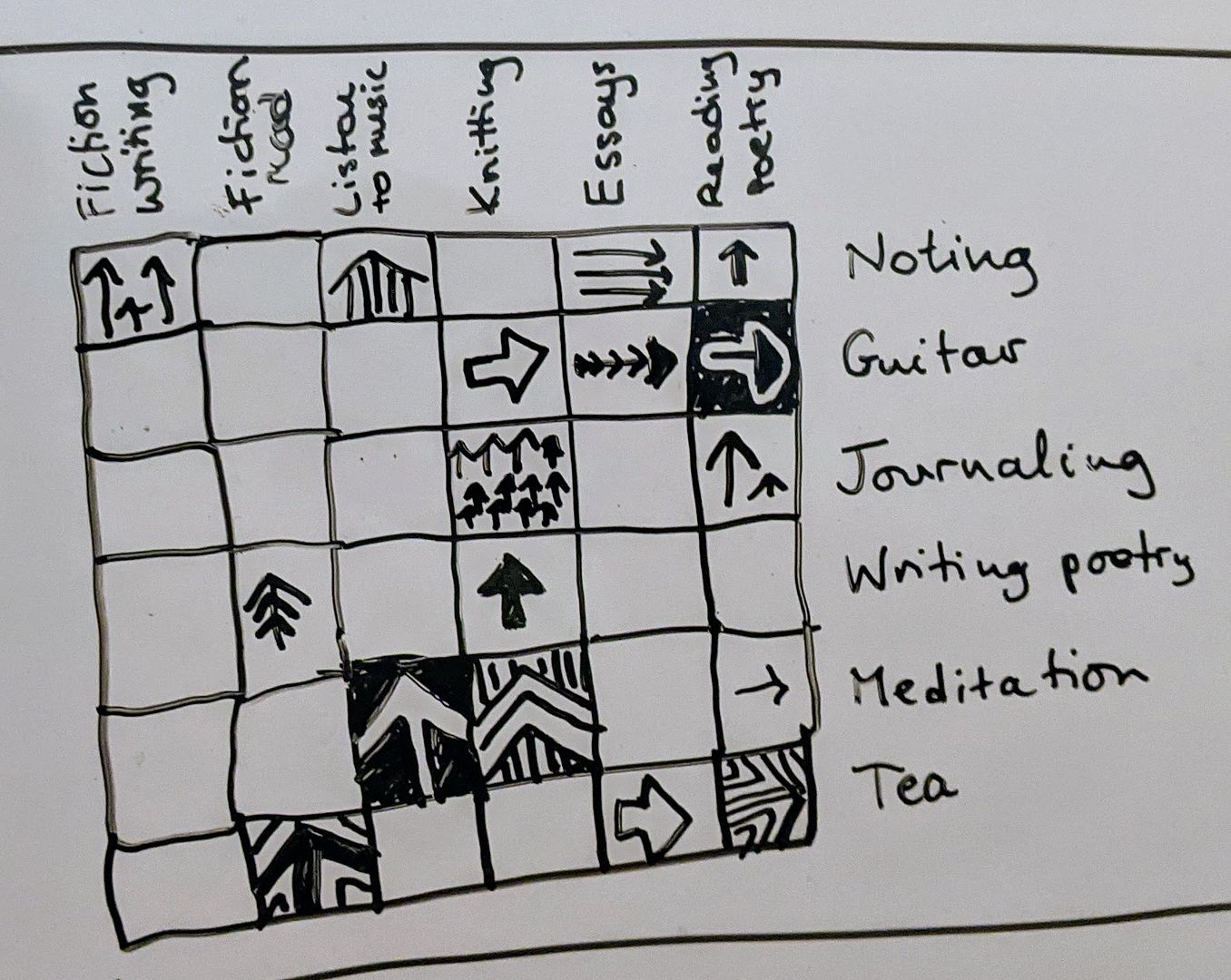

Arrow grid game

There’s something I like about having different systems all the time. Apparently.

-

Remarks on morality, shuddering, judging, friendship and the law

I lately enjoyed listening to Julia Galef and Jonathan Haidt discuss Haidt’s theorized palate of ‘moral foundations’—basic flavors of moral motivation—and how Julia should understand the ones that she doesn’t naturally feel.

I was interested in Julia’s question of whether she was just using different words to those who for instance would say that incest or consensual cannibalism are ‘morally wrong’.

She explained that her earlier guest, Michael Sandel, had asked whether she didn’t ‘cringe’ at the thought of consensual cannibalism, as if he thought that was equivalent to finding it immoral. Julia thought she could personally cringe without morally condemning a thing. She had read Megan McArdle similarly observing that ‘liberals’ claim that incest is moral, but meanwhile wouldn’t befriend someone who practices it, so do in fact morally object after all.


EVERYTHING — WORLDLY POSITIONS — METEUPHORIC