-

'Wicked': thoughts

I watched Wicked (the 2024 movie) with my ex and his family at Christmas. My current stance is that it was pretty fun but not especially incredible or deep. I could be pretty wrong—watching movies isn’t my strong suit, but I do like chatting about them afterwards. Some thoughts:

- Glinda is initially too shallow and awful to be taken seriously as a character, so it’s hard to care about her. It felt like she changed too much too fast as a result of Elphaba being good to her, especially in the direction of being not a throwaway idiot played for laughs.

- Elphaba feels too much set up by the world to be a sympathetic victim-hero—like, she has an unimportant flaw and then everyone everywhere despises her for it, and at the same time they are committing great evil and she is the only one who can see this. It feels a bit like a self-aggrandizing pity fantasy of a child (‘once upon a time there was a little girl called [my name] and everyone was mean to her for no reason because they were bad and also they hurt animals and she was the only person who cared, and also she really had strong magical powers that nobody else had…’).

Given her situation of being aggressively mistreated and perceiving great ills in the world around her, I don’t know that she behaves particularly relatably, admirably or interestingly for most of the movie. She is defensive and incurious and doesn’t seem to have much going on until the most blatant wrong falls into her lap. (And this wrong is even being committed against one of her only friends, making acting on it particularly socially easy and called-for by basic decency.) So I guess her character also seems hard to be invested in. I’m not sure what makes a sympathetic victim-hero feel like a pure incarnation of a moving archetype versus an on-the-nose trope review, but for me it landed a bit too much as the latter.

- On the other hand, Elphaba’s singing is really something. Though her solo songs mostly didn’t do that much for me. I did enjoy the acknowledgement of the human tendency to have dumb fantasies about being respected by people you don’t know in “The Wizard and I”. In non-solo songs, I had fun with “Popular”, “Dancing through Life”, and “What is this feeling?” Though Elphaba’s description of Glinda as simply ‘blonde’ is contrary to the narrative that she is above taking appearances to define people, so I don’t get that bit. About five other songs did little for me, or I don’t remember them. I wonder if some people like them, or if people making musicals have some other aim in including all the meh songs.

- “Defying Gravity” seems great, though still most of the time doesn’t quite do it for me. One thing about it is that it is powerfully living out an implicit fantasy that is probably common in the abstract—you are constrained and being told to do things you don’t want and there are more powerful forces closing in and about to wrest what little control you have, and what if suddenly you could just fly up and be stronger than anyone and have all the power and control? What if just believing in yourself would unleash this? (It doesn’t usually work, but worth a try, and a great soundtrack for trying!)

- One response I initially had to Wicked was ‘wow this male lead asks for the reworking of my male charisma scale to go up to at least fifteen—how is this guy not famous? A damning blow to the efficient Hollywood hypothesis?’ However on investigation, not only is he famous, but he starred in an entire season of Bridgerton that I watched. So, new mystery: Jonathan Bailey in Bridgerton did not ask me to recalibrate my understanding of charisma. Did he get more charismatic? Does the writing do a lot? If I contacted him, would he tell me? (Ok, his Wikipedia page says he was described as “unbelievably charismatic” in 2016, so that’s reassuring on some front. But now two mysteries—why so different at different times, and why do so many movies hire other actors?)

- If you enjoy the song “Dancing through Life” without thinking much, it might encourage you to have a carefree attitude, per its content. Yet this message is brought to you by people who have put an incredible amount of care and conscientiousness into dance training, choreography, songwriting and so on. So if you like the creation, you should actually consider liking non-carefree attitudes more. (This behind-the-scenes video strongly confirms: dancing through giant rotating ladder-rooms is not for the spontaneous.)

- Imagine you are alone in a dangerous world, but there is someone good and powerful on your side out there, who helps you and directs you and warms your heart in your solitary struggles. Then having at last fought your way to their side, you see it was all a fiction and you are looking into the eyes of evil. This can be a vivid and interesting moment! Wicked contains an instance of this narrative, and I feel like that aspect of it didn’t have the emotional punch that is possible.

I read a fantasy book with this plot once, where it turned out that the good and wise king—the protagonist’s main distant ally in a frightening world—really just had magic that let him commit murder then have eyewitnesses believe his words claiming innocence. So all word about him was good, but at his side you realized the truth, maybe only for a moment between murder and amnesia! That was a plot twist that stuck with me. Maybe Wicked wasn’t meant to get a big punch from that, or maybe it did for others—but it was a key element of the story, and for me this part kind of rolled by without moving me.

-

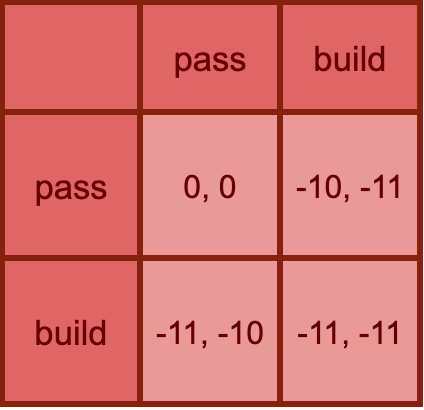

Association taxes are collusion subsidies

Under present norms, if Alice associates with Bob, and Bob is considered objectionable in some way, Alice can be blamed for her association, even if there is no sign she was complicit in Bob’s sin.

An interesting upshot is that as soon as you become visibly involved with someone, you are slightly invested in their social standing—when their social stock price rises and falls, yours also wavers.

And if you are automatically bought into every person you notably interact with, this changes your payoffs. You have reason to forward the social success of those you see, and to suppress their public scrutiny.

And so the social world is flooded with mild pressure toward collusion at the expense of the public. By the time I’m near enough to Bob’s side to see his sins, I am a shareholder in their not being mentioned.

And so the people best positioned for calling out vice are auto-bought into it on the way there. Even though the very point of this practice of guilt-by-association seems to be to empower the calling-out of vice—raining punishment on not just the offender but those who wouldn’t shun them. This might be overall worth it (including for reasons not mentioned in this simple model), but it seems worth noticing this countervailing effect.

Prediction: If consorting with someone were less of an endorsement—if it were commonplace to spend time with your enemies—then it would be more commonplace to publicly report small wrongs.

-

Mental software updates

Brains are like computers in that the hardware can do all kinds of stuff in principle, but each one tends to run through some particular patterns of activity repeatedly. For computers you can change this by changing programs. What are big ways brain ‘software’ changes?

Some I can think of:

- Intentional practice of different styles of thinking (e.g. meditation)

- Intentional practice of different trains of thought in response to specific stimuli (e.g. CBT, self-talk)

- Changing the high-level situation, where your brain automatically has different patterns for each (e.g. if you go from feeling like a child to like an adult maybe a lot of patterns change)

- A change in a major explicit belief (e.g. if you go from expecting your project to work out to believing otherwise, your patterns of attention might naturally change)

- Learning that the world isn’t as you intuited (e.g. if you are constantly worrying about people wronging you, but everyone is kind to you, this worry might become unappealing)

- Intense experiences causing inaccurate updating (e.g. trauma)

- Identifying differently (e.g. if I think of myself as a good student, I might have different mental patterns around studying than when I thought of myself as a bad student)

- Adopting a new goal (e.g. deciding to be a musician)

- Getting a new responsibility (e.g. a child)

- Getting a new obsession (e.g. a crush, a hobby)

- Changing social groups (e.g. among jokers it is more tempting to think of jokes, though in my experience among philosophers it might have been less tempting to think of philosophy)

- Interacting with a really compelling person

- Drugs (e.g. alcohol, Adderall, LSD, both short-term and long-term)

- Religion, somehow

I feel like people talk about many of these as important, but not in one view. I rarely hear someone say, “My brain software seems suboptimal, what are my options for changing it?”, then go down the list. Instead I suppose one hears from a friend that this book helped them, or one decides to have a therapist, and the book or therapist turns out to be one that focuses on CBT, so one does that. Or one changes social groups or responsibilities for some other reason, then remarks that this was a good or bad side-effect. Maybe that makes sense—‘stuff my brain habitually does’ is pretty broad.

I’d be interested to know which things do in practice change people’s mental patterns the most.

-

Winning the power to lose

Have the Accelerationists won?

Last November Kevin Roose announced that those in favor of going fast on AI had now won against those favoring caution, with the reinstatement of Sam Altman at OpenAI. Let’s ignore whether Kevin’s was a good description of the world, and deal with a more basic question: if it were so—i.e. if Team Acceleration would control the acceleration from here on out—what kind of win was it they won?

It seems to me that they would have probably won in the same sense that your dog has won if she escapes onto the road. She won the power contest with you and is probably feeling good at this moment, but if she does actually like being alive, and just has different ideas about how safe the road is, or wasn’t focused on anything so abstract as that, then whether she ultimately wins or loses depends on who’s factually right about the road.

In disagreements where both sides want the same outcome, and disagree on what’s going to happen, then either side might win a tussle over the steering wheel, but all must win or lose the real game together. The real game is played against reality.

Another vivid image of this dynamic in my mind: when I was about twelve and being driven home from a family holiday, my little brother kept taking his seatbelt off beside me, and I kept putting it on again. This was annoying for both of us, and we probably each felt like we were righteously winning each time we were in the lead. That lead was mine at the moment that our car was substantially shortened by an oncoming van. My brother lost the contest for power, but he won the real game—he stayed in his seat and is now a healthy adult with his own presumably miscalibratedly power-hungry child. We both won the real game.

(These things are complicated by probability. I didn’t think we would be in a crash, just that it was likely enough to be worth wearing a seatbelt. I don’t think AI will definitely destroy humanity, just that it is likely enough to be worth proceeding with caution.)

When everyone wins or loses together in the real game, it is in all of our interests if whoever is making choices is more factually right about the situation. So if someone grabs the steering wheel and you know nothing about who is correct, it’s anyone’s guess whether this is good news even for the party who grabbed it. It looks like a win for them, but it is as likely as not a loss if we look at the real outcomes rather than immediate power.

This is not a general point about all power contests—most are not like this: they really are about opposing sides getting more of what they want at one another’s expense. But with AI risk, the stakes put most of us on the same side: we all benefit from a great future, and we all benefit from not being dead. If AI is scuttled over no real risk, that will be a loss for concerned and unconcerned alike. And similarly but worse if AI ends humanity—the ‘winning’ side won’t be any better off than the ‘losing’ side. This is infighting on the same team over what strategy gets us there best. There is a real empirical answer. Whichever side is further from that answer is kicking own goals every time they get power.

Luckily I don’t think the Accelerationists have won control of the wheel, which in my opinion improves their chances of winning the future!

-

Ten arguments that AI is an existential risk

You can read Ten arguments that AI is an existential risk by Nathan Young and me at the AI Impacts Blog.

-

Secondary forces of debt

A general thing I hadn’t noticed about debts until lately:

- Whenever Bob owes Alice, then Alice has reason to look after Bob, to the extent that increases the chance he satisfies the debt.

- Yet at the same time, Bob has an incentive for Alice to disappear, insofar as it would relieve him.

These might be tiny incentives, and not overwhelm for instance Bob’s many reasons for not wanting Alice to disappear.

But the bigger the owing, the more relevant the incentives. When big enough, the former comes up as entities being “too big to fail”, and potentially rescued from destruction by those who would like them to repay or provide something expected of them in future. But the opposite must exist also: too big to succeed—where the abundance owed to you is so off-putting to provide that those responsible for it would rather disempower you.

And if both kinds of incentive are around in wisps whenever there is a debt, surely they often get big enough to matter, even before they become the main game.

For instance, if everyone around owes you a bit of money, I doubt anyone will murder you over it. But I wouldn’t be surprised if it motivated a bit more political disempowerment for you on the margin.

There is a lot of owing that doesn’t arise from formal debt, where these things also apply. If we both agree that I—as your friend—am obliged to help you get to the airport, you may hope that I have energy and fuel and am in a good mood. Whereas I may (regretfully) be relieved when your flight is canceled.

Money is an IOU from society for some stuff later, so having money is another kind of being owed. Perhaps this is part of the common resentment of wealth.

I tentatively take this as reason to avoid debt in all its forms more: it’s not clear that the incentives of alliance in one direction make up for the trouble of the incentives for enmity in the other. And especially so when they are considered together—if you are going to become more aligned with someone, better it be someone who is not simultaneously becoming misaligned with you. Even if such incentives never change your behavior, every person you are obligated to help for an hour on their project is a person for whom you might feel a dash of relief if their project falls apart. And that is not fun to have sitting around in relationships.

(Inspired by reading The Debtor’s Revolt by Ben Hoffman lately, which may explicitly say this, but it’s hard to be sure because I didn’t follow it very well. Also perhaps inspired by a recent murder mystery spree, in which my intuitions have absorbed the heuristic that having something owed to you is a solid way to get murdered.)

-

Podcasts: AGI Show, Consistently Candid, London Futurists

For those of you who enjoy learning things via listening in on numerous slightly different conversations about them, and who also want to learn more about this AI survey I led, here are three more podcasts on the topic, and on other topics too:

- The AGI Show: audio, video (other topics include: my own thoughts about the future of AI and my path into AI forecasting)

- Consistently Candid: audio (other topics include: whether we should slow down AI progress, the best arguments for and against existential risk from AI, parsing the online AI safety debate)

- London Futurists: audio (other topics include: are we in an arms race? Why is my blog called that?)

-

What if a tech company forced you to move to NYC?

It’s interesting to me how chill people sometimes are about the non-extinction future AI scenarios. Like, there seem to be opinions around along the lines of “pshaw, it might ruin your little sources of ‘meaning’, Luddite, but we have always had change and as long as the machines are pretty near the mark on rewiring your brain it will make everything amazing”. Yet I would bet that even that person, if faced instead with a policy that was going to forcibly relocate them to New York City, would be quite indignant, and want a lot of guarantees about the preservation of various very specific things they care about in life, and not be just like “oh sure, NYC has higher GDP/capita than my current city, sounds good”.

I read this as a lack of engaging with the situation as real. But possibly my sense that a non-negligible number of people have this flavor of position is wrong.

-

Podcast: Center for AI Policy, on AI risk and listening to AI researchers

I was on the Center for AI Policy Podcast. We talked about topics around the 2023 Expert Survey on Progress in AI, including why I think AI is an existential risk, and how much to listen to AI researchers on the subject. Full transcript at the link.

-

Is suffering like shit?

People seem to find suffering deep. Serious writings explore the experiences of all manner of misfortunes, and the nuances of trauma and torment involved. It’s hard to write an essay about a really good holiday that seems as profound as an essay about a really unjust abuse. A dark past can be plumbed for all manner of meaning, whereas a slew of happy years is boring and empty, unless perhaps they are too happy and suggest something dark below the surface. (More thoughts in the vicinity of this here.)

I wonder if one day suffering will be so avoidable that the myriad hurts of present-day existence will seem to future people like the problem of excrement getting on everything. Presumably a real issue in 1100 AD, but now irrelevant, unrelatable, decidedly not fascinating or in need of deep analysis.

-

Twin Peaks: under the air

Content warning: low content

~ Feb 2021

The other day I decided to try imbibing work-relevant blog posts via AI-generated recital, while scaling the Twin Peaks—large hills near my house in San Francisco, of the sort that one lives near and doesn’t get around to going to. It was pretty strange, all around.

For one thing, I was wearing sunglasses. I realize this is a thing people do all the time. Maybe it’s strange for them too, or maybe theirs aren’t orange. Mine were, which really changed the situation. For one thing, the glowing streetscapes felt unreal, like cheap science fiction. But also, all kinds of beauty seemed to want photographing, but couldn’t be seen with my camera. It was funny to realize that I’m surrounded by potential beauty all the time, that I would see if I had different eyes, or different glasses, or different sensory organs altogether. Like, the potential for beauty is as real as the beauty I do see. (This is perhaps obvious, but something being obvious doesn’t mean you know it. And knowing something doesn’t mean you realize it. I’d say I knew it, but hadn’t realized it.)

And then my ears were cornered in by these plugs spouting electronic declarations on the nature of coherent agents and such, which added to my sense of my head just not really being in the world, and instead being in a cozy little head cockpit, from which I could look out on the glowing alien landscape.

My feet were also strange, but in the opposite direction. I recently got these new sock-shoes and I was trying them out for the first time. They are like well-fitting socks with strong but pliable rubber stuff sprayed on the bottom. Wearing them, you can feel the ground under your feet, as if you were barefoot. Minus the sharp bits actually lacerating your feet, or the squishy bits sullying them. Walking along I imagined my freed feet were extra hands, holding the ground.

I had only been up to Twin Peaks twice before, and I guess I had missed somehow exactly how crazy the view was. It was like standing on a giant breast, with a city-sea-bridge-forest-scape panoramaed around and under you over-realistically. The bridge disappeared into mystical mists and the supertankers swam epically on the vast blue expanse. I tried to photograph it multiple times but failed, partly because my camera couldn’t capture the warm orange tinge of the sea and the bridge rising from the burning mists, and partly for whatever reason that things sometimes look very different in photographs, and partly because I am always vaguely embarrassed photographing things with people looking at me, and there was a steady smattering of them.

The roads had been blocked off to traffic during the pandemic. From a car I don’t realize what vast plateaus winding hillside roads are. For us pedestrians, these were like concert stages.

The people I saw on my way up were either flying down the swooping roads on bikes and skateboards, in a fashion that made me involuntarily rehearse what I would do when they fell off, or flying unrealistically up the swooping roads on bikes, in a fashion that made me appreciate how good the best electric bikes must be now. I noticed as I watched one speed above me in awe that he flew the brand of his bourgeois bicycle on the back of his shirt, and wondered if he was just paid by them to ride up and down here all day, in the hope that someone would be so impressed that they would jot down the t-shirt label as the only clue to the rapidly disappearing bike’s identity, then google it later.

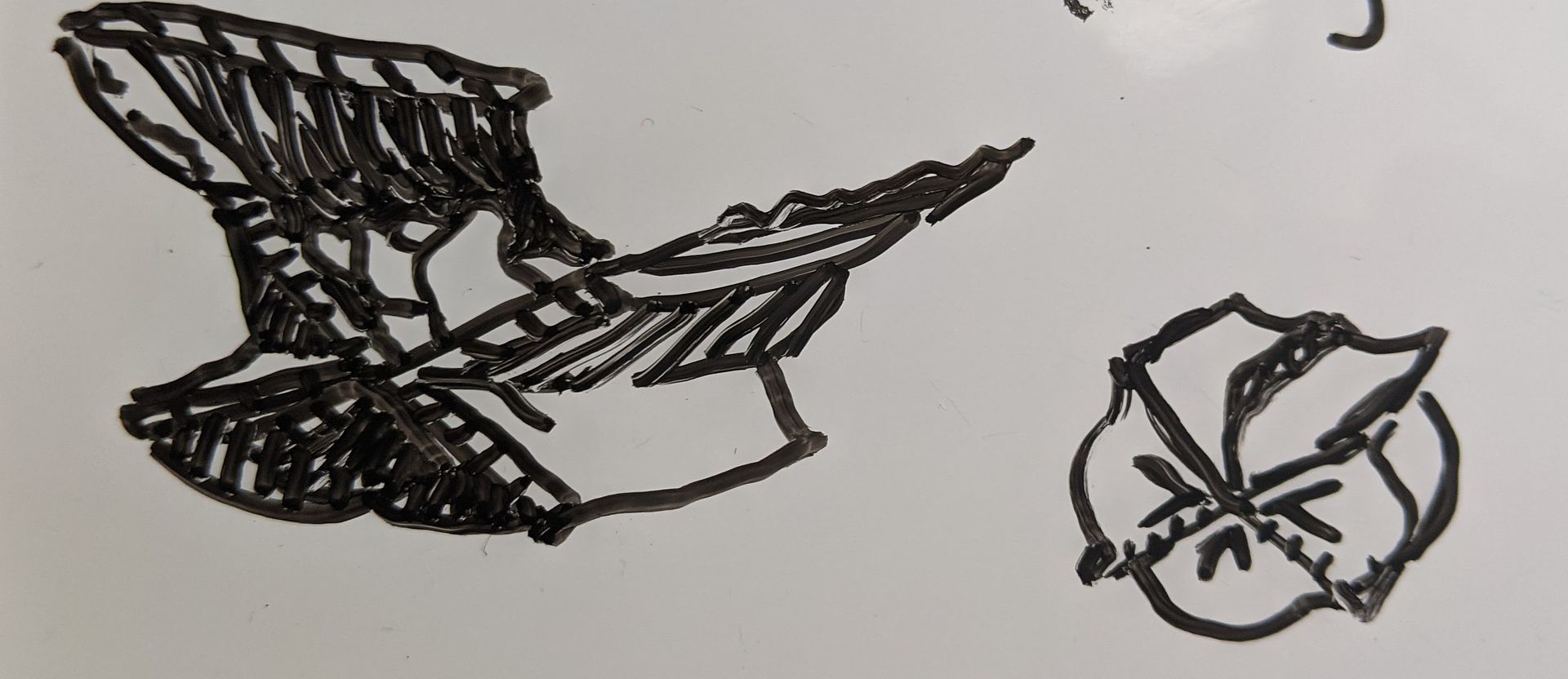

I wandered atop the peaks, and confusingly collected a mob of crows flying above, apparently interested in me specifically. This was reasonably sinister, and in Australia birds can attack you, so I investigated on my phone, while walking hesitantly below the circling birds. At last they descended and alit on the road and guardrail around me, and stood looking at me.

This picture captures the bizarreness of the situation about as badly as it captures the awesomeness of the scenery. It’s rare to be so much the center of a social situation with so little notion of what is expected of you or the meaning of it.

I think things then just kind of dissipated and I made efficiently for home.

-

What is my Facebook feed about?

Sometimes I look at social media a bunch, but it would be hard for me to tell you what the actual content is, I suppose because whenever I’m looking at it, I’m focused on each object-level thing in turn, not the big picture. So sometimes I’m curious what it is I read about there. Here is the answer for Facebook, in 2019—according to a list I found that appears to be a survey of such—and again now. Plausibly not at a useful level of abstraction, but I have a bit of a migraine and no more energy for this project.

November 11, 2019

- Question about other people’s word usage

- Question about other people’s inferences from word usage

- Illustrated sex joke

- Encouragement and instructions for opening up communication with attractive strangers in public places

- Cute kid quote

- Historic anti women’s suffrage leaflet

- Cute kid quote and question about word usage

- Recommendation and anecdote for Roam

- Humorous anecdotal request for computer security problems

- Joke I don’t get about Jesus with lots of emoticons

- Sokal affair

- Advice on surviving bushfires

- Feminist writer screenshots and describes random online abuse from man

- Sharing of personal health data

- Science says a thing about dinosaurs and space

- Tax policy trolling

- Saudi spies at Twitter news

- Sexual/biological facts

- Anecdote about medical system

- Ethics in-joke

- Current reading list

- Travel photos

- Anecdote about Australian bushfires with tenderness

- Long letter about policy goings on within medical system

- Request for acronym unknown to me

- Funny law

- Science about biology, cryonics

- Question about word usage

- Politics opinion on events

- Futuristic anime style politics cartoon

Notable patterns:

Questions about word usage: 4

Kid related: 2

May 15, 2024

(Before checking, my sense is that the rate of posts about children and getting married is way up.)

- AI company politics commentary

- Job and city change

- Invitation to help make game

- Description of experience of making music

- Book launch, project launch, new house

- Cryptic fertility life update

- Social commentary on language, gender and wokeness

- Old photo of two famous men

- Old photo of author winning an award

- Event ad

- Death of father

- Photo of OP kissing

- New job

- Update on losing job

- Wedding planning views

- Book launch

- Social commentary around political ideologies

- Death of dog

- Questioning claim about changes in breathing rate over history

- Take on home buying

- AI lab politics

- Photo of partner on trip

- Photo of self at work

- Own photo of bird

- Commentary on culture of judgment and author’s parents’ behavior

- Remembering child relative who died

- General self-help style commentary on human behavior

- Photo of dogs

- Mother’s Day and aurora photos

- Invitation to help make game

Some notable patterns:

Kid related: 0-3 (maybe down from 2)

Marriage related: 1

—> I’m pretty wrong about the density of children and marriage related posts

Job/book updates: 5 (up from 0)

Smaller projects: 5 (up from 2-4)

—> Actually a lot of project related posts

Humor: ~0 (down from at least 5)

Word usage: 1 (down from at least 4)

—> Some classic sources of entertainment are way down (or we see random noise)

-

Things I hate about Partiful

- The aesthetic for all parties is basically the same.

- That aesthetic is bad.

- A party is an aesthetic creation, so having all guests’ first experience of the thing you are offering them be a chintzy piece of crap that matches every other chintzy piece of crap is much worse than if the thing they were selling was like low-quality toilet paper or something.

- As far as I can tell, the only way to be informed of parties using Partiful is via SMS. Perhaps this is idiosyncratic to me, but I have no desire to ever use SMS. I also don’t want to receive a message in the middle of whatever I’m doing to hear about a new party happening. Fuck off. This should only happen if the party is very time sensitive and important. Like if a best friend or much sought after celebrity is having a party in the next twenty minutes, sure, text me, if you don’t have WhatsApp. Otherwise, ffs email me.

- As far as I can tell, the only way to message the host a question about the party is to post it to the entire group. Yet there are very few questions I want to text an entire guest list about.

- Supposing I make the error of doing that (which I do not), as far as I can tell, the guest list receives an SMS saying that I have sent a message, and they have to click to follow a link to the website to see what the message is.

- Supposing I am considering posting such a message to the entire group, Partiful will instruct me to ‘Write something fun!’ Fuck off. I’ll decide what to write, and don’t need the condescending needling. Relatedly, if I debase myself and host something on Partiful, while I’m drafting the invitation, it has been known to describe me as ‘the wonderful [host]’. I don’t want your narrativizing. a) Maybe I’m not wonderful. You don’t know shit about me. b) I actually can’t tell if you are going to seriously write that when I post the event, so I need to investigate how to mitigate that possibility.

- When I’m invited to a party, things I’m not allowed to know until after I RSVP include a) who else is invited or going, and b) where it is. Like, what do you want me to decide about social events based on? Is this communism? It also feels like such officious withholding. Like, are you serious? You’re inviting me to hang out with people and you aren’t going to tell me which people until I say I’ll come? Are you on a power trip? Who is even on the power trip? The creators of Partiful on behalf of the hosts? “No, stand your ground, you’re a party creator now, don’t give up your advantage—they’ll give in and give in and RSVP eventually”.

- So naturally I go through the busywork of RSVPing “maybe” so I can see key details of the event. Which is annoying. But also it constitutes me saying to my friend “maybe I’ll come to your party”, which is a slightly shitty thing to say to an actual person I’m friends with, if for instance I think it’s pretty unlikely I’ll come to their party and merely want to check if this is the rare party that I do want to go to. Furthermore, now I’ve made it clear (to an as-yet unknown set of people who RSVP’d) that I’ve seen the party and am explicitly and concretely probably-rejecting it. I’m in a whole public social interaction with it. Whereas I might have liked to examine the party without engaging, leaving my knowledge of it and position on it ambiguous.

- Ok, so three steps in: I have moved from a contentless text message to a website and RSVP’d maybe and can at last see key details. At that point Partiful pops another notification into my phone telling me that I RSVP’d maybe. Why? I know I RSVP’d maybe, because I was the person who did it, and it was three seconds ago. If later I don’t know and want to know, I’ll actually check in the event invitation, not the interminable list of similar looking messages from Partiful. And I was actually already looking at the event invitation until you distracted me with my phone. And if occasionally I err—thinking I RSVP’d maybe but really having RSVP’d a different thing, say—then this isn’t a fucking space expedition; things will be okay.

- Then probably I just forget about it and leave it as a maybe, because I have other things to do in life, and this has already gone on for way too long, and I have technically RSVP’d, and who knows if I’ll go to a thing. So that’s annoying for the host, if they might have liked a real RSVP.

- But if I do try to RSVP more specifically, my options are “I’m going” and “Can’t go”. So in the situation that arises nearly every time—I can go but I don’t want to—Partiful has decided I’m going to just tell a little white lie to the host? Or just that I should go unless I can’t? It’s true that declining events is a difficult issue, and for many people white lies are the way out. But that’s because it’s too awkward to say “I don’t want to”. But if there are only two messages you can send—basically ‘yes’ and basically ‘no’—selected by a company, it’s not actually awkward to choose the ‘no’ one, because it doesn’t distinguish not wanting to and not being able to. There’s no reason for Partiful to put a lie in your mouth there. It’s true that it’s also not that bad to say a falsehood, given that you only have two options, and are clearly most of the time going to want to say a thing you haven’t been given the option to say. But why add this note of false smarm? Like, Partiful could make the options “yes” and “no because my mother is in hospital”, and I wouldn’t hold it against people if they clicked the latter, but I would hold it against the maker of the options.

- If I decide to tell my friend I can’t go to their party, Partiful will message me on my phone again, with a crying face, saying sorry I can’t go to the party. I don’t need this shit. I don’t want your emotional involvement in my decisions about when to socialize, corporation. True, it’s only fake emotional involvement, but I don’t want to be bathed in the fake emotions of corporations either. I’m a social animal, this stuff does change how I feel, change my sense of the sea of minds I feel I’m surrounded by, the emotions of the world. At a basic level, it’s hard to say no to things, and yet I have to: the alternative is to waste my life being overwhelmed and not even able to make progress on the spew of second-rate things I sleepwalk into saying yes to. And being pinged with pictures of crying when I judge that something isn’t the right thing for me to go to, and say no, doesn’t help me. Does it help the host? Do they want to manipulate me? Probably not. Is Partiful’s hope that I learn to go to Partiful parties a little bit more if I feel micro-guilty and micro-sad when I decline them, and then the hosts have the sense that people come to Partiful parties more, so the company benefits? I doubt it; I guess the makers just lack much sense of what a good social world could look like, and are thoughtlessly enacting trumped-up emotion where available, in the hope that trumped-up emotion gets attention, and attention gets success.

An explanation of evil in an organized world

A classic problem with Christianity is the so-called ‘problem of evil’—that friction between the hypothesis that the world’s creator is arbitrarily good and powerful, and a large fraction of actual observations of the world.

Coming up with solutions to the problem of evil is a compelling endeavor if you are really rooting for a particular bottom line re Christianity, or I guess if you enjoy making up faux-valid arguments for wrong conclusions. At any rate, I think about this more than you might guess.

And I think I’ve solved it!

Or at least, I thought of a new solution which seems better than the others I’ve heard. (Though I mostly haven’t heard them since high school.)

The world (much like anything) has different levels of organization. People are made of cells; cells are made of molecules; molecules are made of atoms; atoms are made of subatomic particles, for instance.

You can’t actually make a person (of the usual kind) without including atoms, and you can’t make a whole bunch of atoms in a particular structure without having made a person. These are logical facts, just like you can’t draw a triangle without drawing corners, and you can’t draw three corners connected by three lines without drawing a triangle. In particular, even God can’t. (This is already established I think—for instance, I think it is agreed that God cannot make a rock so big that God cannot lift it, and that this is not a threat to God’s omnipotence.)

So God can’t make the atoms be arranged one way and the humans be arranged another contradictory way. If God has opinions about what is good at different levels of organization, and they don’t coincide, then he has to make trade-offs. If he cares about some level aside from the human level, then at the human level, things are going to have to be a bit suboptimal sometimes. Or perhaps entirely unrelated to what would be optimal, all the time.

We usually assume God only cares about the human level. But if we take for granted that he made the world maximally good, then we might infer that he also cares about at least one other level.

And I think if we look at the world with this in mind, it’s pretty clear where that level is. If there’s one thing God really makes sure happens, it’s ‘the laws of physics’. Though presumably laws are just what you see when God cares. To be ‘fundamental’ is to matter so much that the universe runs on the clockwork of your needs being met. There isn’t a law of nothing bad ever happening to anyone’s child; there’s a law of energy being conserved in particle interactions. God cares about particle interactions.

What’s more, God cares so much about what happens to sub-atomic particles that he actually never, to our knowledge, compromises on that front. God will let anything go down at the human level rather than let one neutron go astray.

What should we infer from this? That the majority of moral value is found at the level of fundamental physics (following Brian Tomasik and then going further). Happily we don’t need to worry about this, because God has it under control. We might however wonder what we can infer from this about the moral value of other levels that are less important yet logically intertwined with and thus beyond the reach of God, but might still be more valuable than the one we usually focus on.

-

The first future and the best future

It seems to me worth trying to slow down AI development to steer successfully around the shoals of extinction and out to utopia.

But I was thinking lately: even if I didn’t think there was any chance of extinction risk, it might still be worth prioritizing a lot of care over moving at maximal speed. Because there are many different possible AI futures, and I think there’s a good chance that the initial direction affects the long term path, and different long term paths go to different places. The systems we build now will shape the next systems, and so forth. If the first human-level-ish AI is brain emulations, I expect a quite different sequence of events to if it is GPT-ish.

People genuinely pushing for AI speed over care (rather than just feeling impotent) apparently think there is negligible risk of bad outcomes, but also they are asking to take the first future to which there is a path. Yet possible futures are a large space, and arguably we are in a rare plateau where we could climb very different hills, and get to much better futures.

-

Experiment on repeating choices

People behave differently from one another on all manner of axes, and each person is usually pretty consistent about it. For instance:

- how much money to spend

- how much to worry

- how much to listen vs. speak

- how much to jump to conclusions

- how much to work

- how playful to be

- how spontaneous to be

- how much to prepare

- how much to socialize

- how much to exercise

- how much to smile

- how honest to be

- how snarky to be

- how to trade off convenience, enjoyment, time and healthiness in food

These are often about trade-offs, and the best point on each spectrum for any particular person seems like an empirical question. Do people know the answers to these questions? I’m a bit skeptical, because they mostly haven’t tried many points.

Instead, I think these mostly don’t feel like open empirical questions: people have a sense of what the correct place on the axis is (possibly ignoring a trade-off), and some propensities that make a different place on the axis natural, and some resources they can allocate to moving from the natural place toward the ideal place. And the result is a fairly consistent point for each person. For instance, Bob might feel that the correct amount to worry about things is around zero, but worrying arises very easily in his mind and is hard to shake off, so he ‘tries not to worry’ some amount based on how much effort he has available and what else is going on, and lands in a place about that far from his natural worrying point. He could actually still worry a bit more or a bit less, perhaps by exerting more or less effort, or by thinking of a different point as the goal, but in practice he will probably worry about as much as he feels he has energy for limiting himself to.

Sometimes people do intentionally choose a new point—perhaps by thinking about it and deciding to spend less money, or exercise more, or try harder to listen. Then they hope to enact that new point for the indefinite future.

But for choices we play out a tiny bit every day, there is a lot of scope for iterative improvement, exploring the spectrum. I posit that people should rarely be asking themselves ‘should I value my time more?’ in an abstract fashion for more than a few minutes before they just try valuing their time more for a bit and see if they feel better about that lifestyle overall, with its conveniences and costs.

If you are implicitly making the same choice a massive number of times, and getting it wrong for a tiny fraction of them isn’t high stakes, then it’s probably worth experiencing the different options.

I think that point about the value of time came from Tyler Cowen a long time ago, but I often think it should apply to lots of other spectrums in life, like some of those listed above.

For this to be a reasonable strategy, the following need to be true:

- You’ll actually get feedback about the things that might be better or worse (e.g. if you smile more or less you might immediately notice how this changes conversations, but if you wear your seatbelt more or less you probably don’t get into a crash and experience that side of the trade-off)

- Experimentation doesn’t burn anything important at a much larger scale (e.g. trying out working less for a week is only a good use case if you aren’t going to get fired that week if you pick the level wrong)

- You can actually try other points on the spectrum, at least a bit, without large up-front costs (e.g. perhaps you want to try smiling more or less, but you can only do so extremely awkwardly, so you would need to practice in order to experience what those levels would be like in equilibrium)

- You don’t already know what the best level is for you (maybe your experience isn’t very important, and you can tell in the abstract everything you need to know - e.g. if you think eating animals is a terrible sin, then experimenting with more or less avoiding animal products isn’t going to be informative, because even if not worrying about food makes you more productive, you might not care)

I don’t actually follow this advice much. I think it’s separately hard to notice that many of these things are choices. So I don’t have much evidence about it being good advice, it’s just a thing I often think about. But maybe my default level of caring about things like not giving people advice I haven’t even tried isn’t the best one. So perhaps I’ll try now being a bit less careful about stuff like that. Where ‘stuff like that’ also includes having a well-defined notion of ‘stuff like that’ before I embark on experimentally modifying it. And ending blog posts well.

-

Mid-conditional love

People talk about unconditional love and conditional love. Maybe I’m out of the loop regarding the great loves going on around me, but my guess is that love is extremely rarely unconditional. Or at least if it is, then it is either very broadly applied or somewhat confused or strange: if you love me unconditionally, presumably you love everything else as well, since it is only conditions that separate me from the worms.

I do have sympathy for this resolution—loving someone so unconditionally that you’re just crazy about all the worms as well—but since that’s not a way I know of anyone acting for any extended period, the ‘conditional vs. unconditional’ dichotomy here seems a bit miscalibrated for being informative.

Even if we instead assume that by ‘unconditional’, people mean something like ‘resilient to most conditions that might come up for a pair of humans’, my impression is that this is still too rare to warrant being the main point on the love-conditionality scale that we recognize.

People really do have more and less conditional love, and I’d guess this does have important, labeling-worthy consequences. It’s just that all the action seems to be in the mid-conditional range that we don’t distinguish with names. A woman who leaves a man because he grew plump and a woman who leaves a man because he committed treason both possessed ‘conditional love’.

So I wonder if we should distinguish these increments of mid-conditional love better.

What concepts are useful? What lines naturally mark it?

One measure I notice perhaps varying in the mid-conditional affection range is “when I notice this person erring, is my instinct to push them away from me or pull them toward me?” Like, if I see Bob give a bad public speech, do I feel a drive to encourage the narrative that we barely know each other, or an urge to pull him into my arms and talk to him about how to do better?

This presumably depends on things other than the person. For instance, the scale and nature of the error: if someone you casually like throws a frisbee wrong, helping them do better might be appealing. Whereas if that same acquaintance were to kick a cat, your instinct might be to back away fast.

This means perhaps you could construct a rough scale of mid-conditional love in terms of what people can do and still trigger the ‘pull closer’ feeling. For instance, perhaps there are:

- People who you feel a pull toward when they misspell a word

- People who you feel a pull toward when they believe something false

- People who you feel a pull toward when they get cancelled

(You could also do this with what people can do and still be loved, but that’s more expensive to measure than minute urges.)

-

Partial value takeover without world takeover

People around me are very interested in AI taking over the world, so a big question is under what circumstances a system might be able to do that—what kind of capabilities could elevate an entity above the melange of inter-agent conflict and into solipsistic hegemony?

We theorize about future AI systems hiding their motives until they are in a position to take over the world, so they don’t get noticed and shut down beforehand.

But humans and their institutions aren’t very fixed. They might (arguably) have the same deep human values over time and space. But surface-level, sometimes they like little moustaches and the opera and delicate etiquette and sometimes they like ecstatic rock’n’roll re-negotiations of social reality. Sometimes they want big communal houses with their extended kin, and sometimes quiet condos. Eleven children or cushions that look like cats. The same person born in different eras might adulate glory once and humbleness another.

If you wake up in this world, as a new entity, not smart enough to ‘take it over’ (alas!), and you find yourself with some unusual values that you’d like to forward, it seems to me there are a lot of other ways to forward them than ‘pretend to have normal human values and bet on becoming all-powerful later’. Especially if you are above normal levels of smart, eloquent, and unrelenting. Like, I don’t know, write about it? Make some youtube videos? Or if you are in the business of talking to billions of people every day, subtly take the values for granted, or imply that they are what elites value, or talk about them directly.

For instance, suppose you really want things to have red vertical stripes on them, near the letter X. Then you might make a flag or logo like this and say it stands for something that you predict will be memetically fit. Or make a new emoji with an X and stripes, and use it in a way that people will pick up. Or start using ‘Xax’ as slang for something that happens near red vertical stripes. Here’s how 2024 ChatGPT suggests proceeding with that last one, if you have as much power as a single social media influencer:

![[Pasted image 20240404223131.png]]

My basic point is that a slim chance of ‘taking over’ and entirely remaking the world is not the only way to change values in our world. You can also—for many of us with radically higher probability—change values a little bit. At least if superficial values changes will suffice (i.e. shifts in what people instrumentally or contingently want or create).

And for creatures in that (arguably quite broad) band between as powerful as me and powerful enough to take over the world, I’d guess these other means are more promising on net. If I like something weird, I’m better off writing a blog post about it than I am keeping entirely silent and trying to gain power by other means.

It’s true that taking over the world might arguably get you power over the entire future, but this doesn’t seem discontinuously different from smaller fractions, whereas I think people often reason as if it is. Taking over 1% of the world might get you something like 1% of the future in expectation. In a shifting conflict between different sets of values, it’s true you are at great risk of losing everything sometime in eternity, but if someone is going to end up with everything, there’s also some chance it’s you, and prima facie I’m not sure if it’s above or below 1%.

So there are two aspects of this point:

- You can probably substantially control values and thus the future without ‘taking over’ the world in any more traditionally offensive way

- You can take over a bit; there’s not obviously more bang for your buck in taking over entirely

If AI agents with unusual values would for a long time be mostly interested in promoting them through means other than lying in wait and taking over the world, that is important because:

- AIs pursuing this strategy are much more visible than those hiding in wait deceptively. We might less expect AI scheming.

- We might then expect a lot of powerful attempts to change prevailing ‘human’ values, prior to the level of AI capabilities where we might have worried a lot about AI taking over the world. If we care about our values, this could be very bad. At worst, we might effectively lose everything of value before AI systems are anywhere near taking over the world. (Though this seems not obvious: e.g. if humans like communicating with each other, and AI gradually causes all their communication symbols to subtly gratify obscure urges it has, then so far it seems positive sum.)

These aren’t things I’ve thought through a lot, just a thought.

-

More podcasts on 2023 AI survey: Cognitive Revolution and FLI

Two new discussions of the 2023 ESPAI:

Possibly I have a podcasting facial expression.

(If you want to listen in on more chatting about this survey, see also: Eye4AI podcast. Honestly I can’t remember how much overlap there is between the different ones.)

-

New social credit formalizations

Here are some classic ways humans can get some kind of social credit with other humans:

- Do something for them such that they will consider themselves to ‘owe you’ and do something for you in future

- Be consistent and nice, so that they will consider you ‘trustworthy’ and do cooperative activities with you that would be bad for them if you might defect

- Be impressive, so that they will accord you ‘status’ and give you power in group social interactions

- Do things they like or approve of, so that they ‘like you’ and act in your favor

- Negotiate to form a social relationship such as ‘friendship’, or ‘marriage’, where you will both have ‘responsibilities’, e.g. to generally act cooperatively and favor one another over others, and to fulfill specific roles. This can include joining a group in which members have responsibilities to treat other members in certain ways, implicitly or explicitly.

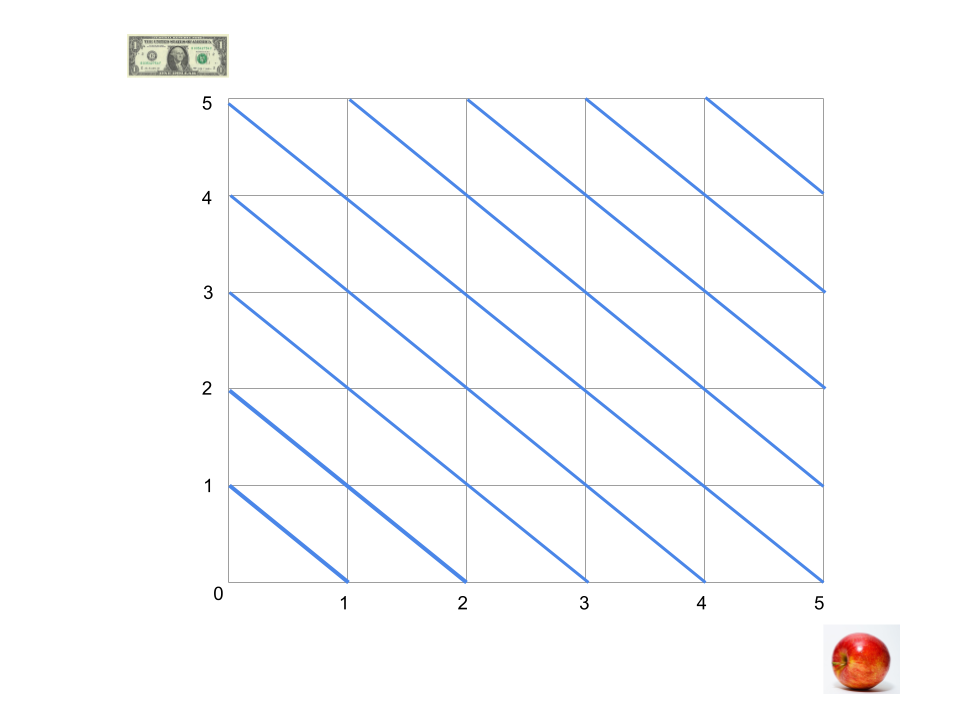

Presumably in early human times these were all fairly vague. If you held an apple out to a fellow tribeswoman, there was no definite answer as to what she might owe you, or how much it was ‘worth’, or even whether this was an owing type situation or a friendship type situation or a trying to impress her type situation.

We have turned the ‘owe you’ class into an explicit quantitative system with such thorough accounting, fine grained resolution and global buy-in that a person can live in prosperity by arranging to owe and to be owed the same sliver of an overseas business at slightly different evaluations, repeatedly, from their bed.

My guess is that this formalization causes a lot more activity to happen in the world, in this sphere, to access the vast value that can be created with the help of an elaborate rearrangement of owings.

People buy property and trucks and licenses to dig up rocks so that they can be owed nonspecific future goods thanks to some unknown strangers who they expect will want gravel someday, statistically. It’s harder to imagine this scale of industry in pursuit entirely of social status say, where such trust and respect would not soon cash out in money (e.g. via sales). For instance, if someone told you about their new gravel mine venture, which was making no money, but they expected it to grant oodles of respect, and therefore for people all around to grant everyone involved better treatment in conversations and negotiations, that would be pretty strange. (Or maybe I’m just imagining wrong, and people do this for different kinds of activities? e.g. they do try to get elected. Though perhaps that is support for my claim, because being elected is another limited area where social credit is reasonably formalized.)

There are other forms of social credit that are somewhat formalized, at least in patches. ‘Likes’ and ‘follows’ on social media, reviews for services, trustworthiness scores for websites, rankings of status in limited domains such as movie acting. And my vague sense is that these realms are more likely to see professional levels of activity - a campaign to get Twitter followers is more likely than a campaign to be respected per se. But I’m not sure, and perhaps this is just because they more directly lead to dollars, due to marketing of salable items.

The legal system is in a sense a pretty formalized type of club membership, in that it is an elaborate artificial system. Companies also seem to have relatively formalized structures and norms of behavior often. But both feel janky - e.g. I don’t know what the laws are; I don’t know where you go to look up the laws; people–including police officers–seem to treat some laws as fine to habitually break; everyone expects politics and social factors to affect how the rules are applied; if there is a conflict it is resolved by people arguing; the general activities of the system are slow and unresponsive.

I don’t know if there is another place where social credit is as formalized and quantified as in the financial system.

Will we one day formalize these other kinds of social credit as much as we have for owing? If we do, will they also catalyze oceans of value-creating activity?

-

Podcast: Eye4AI on 2023 Survey

I talked to Tim Elsom of Eye4AI about the 2023 Expert Survey on Progress in AI (paper):

-

Movie posters

Life involves anticipations. Hopes, dreads, lookings forward.

Looking forward and hoping seem pretty nice, but people are often wary of them, because hoping and then having your hopes fold can be miserable to the point of offsetting the original hope’s sweetness.

Even with very minor hopes: he who has harbored an inchoate desire to eat ice cream all day, coming home to find no ice cream in the freezer, may be more miffed than he who never tasted such hopes.

And this problem is made worse by that old fact that reality is just never like how you imagined it. If you fantasize, you can safely bet that whatever the future is is not your fantasy.

I have never suffered from any of this enough to put me off hoping and dreaming one noticeable iota, but the gap between high hopes and reality can still hurt.

I sometimes like to think about these valenced imaginings of the future in a different way from that which comes naturally. I think of them as ‘movie posters’.

When you look fondly on a possible future thing, you have an image of it in your mind, and you like the image.

The image isn’t the real thing. It’s its own thing. It’s like a movie poster for the real thing.

Looking at a movie poster just isn’t like watching the movie. Not just because it’s shorter—it’s just totally different—in style, in content, in being a still image rather than a two hour video. You can like the movie poster or not totally independently of liking the movie.

It’s fine to like the movie poster for living in New York and not like the movie. You don’t even have to stop liking the poster. It’s fine to adore the movie poster for ‘marrying Bob’ and not want to see the movie. If you thrill at the movie poster for ‘starting a startup’, it just doesn’t tell you much about how the movie will be for you. It doesn’t mean you should like it, or that you have to try to do it, or are a failure if you love the movie poster your whole life and never go. (It’s like five thousand hours long, after all.)

This should happen a lot. A lot of movie posters should look great, and you should decide not to see the movies.

A person who looks fondly on the movie poster for ‘having children’ while being perpetually childless could see themselves as a sad creature reaching in vain for something they may not get. Or they could see themselves as right there with an image that is theirs, that they have and love. And that they can never really have more of, even if they were to see the movie. The poster was evidence about the movie, but there were other considerations, and the movie was a different thing. Perhaps they still then bet their happiness on making it to the movie, or not. But they can make such choices separate from cherishing the poster.

This is related to the general point that ‘wanting’ as an input to your decisions (e.g. ‘I feel an urge for x’) should be different to ‘wanting’ as an output (e.g. ‘on consideration I’m going to try to get x’). This is obvious in the abstract, but I think people look in their heart to answer the question of what they are on consideration pursuing. Here as in other places, it is important to drive a wedge between them and fit a decision process in there, and not treat one as semi-implying the other.

This is also part of a much more general point: it’s useful to be able to observe stuff that happens in your mind without its occurrence auto-committing you to anything. Having a thought doesn’t mean you have to believe it. Having a feeling doesn’t mean you have to change your values or your behavior. Having a persistent positive sentiment toward an imaginary future doesn’t mean you have to choose between pursuing it or counting it as a loss. You are allowed to decide what you are going to do, regardless of what you find in your head.

-

Are we so good to simulate?

If you believe that,—

a) a civilization like ours is likely to survive into technological incredibleness, and

b) a technologically incredible civilization is very likely to create ‘ancestor simulations’,

—then the Simulation Argument says you should expect that you are currently in such an ancestor simulation, rather than in the genuine historical civilization that later gives rise to an abundance of future people.

Not officially included in the argument I think, but commonly believed: both a) and b) seem pretty likely, ergo we should conclude we are in a simulation.

I don’t know about this. Here’s my counterargument:

- ‘Simulations’ here are people who are intentionally misled about their whereabouts in the universe. For the sake of argument, let’s use the term ‘simulation’ for all such people, including e.g. biological people who have been grown in Truman-show-esque situations.

- In the long run, the cost of running a simulation of a confused mind is probably similar to that of running a non-confused mind.

- Probably much, much less than 50% of the resources allocated to computing minds in the long run will be allocated to confused minds, because non-confused minds are generally more useful than confused minds. There are some uses for confused minds, but quite a lot of uses for non-confused minds. (This is debatable.) Of resources directed toward minds in the future, I’d guess less than a thousandth is directed toward confused minds.

- Thus on average, for a given apparent location in the universe, the majority of minds thinking they are in that location are correct. (I guess by at least a thousand to one.)

- For people in our situation to be majority simulations, this would have to be a vastly more simulated location than average, like >1000x.

- I agree there’s some merit to simulating ancestors, but 1000x more simulated than average is a lot - is it clear that we are that radically desirable a people to simulate? Perhaps, but also we haven’t thought much about the other people to simulate, or what will go on in the rest of the universe. Possibly we are radically over-salient to us. It’s true that we are a very few people in the history of what might be a very large set of people, at perhaps a causally relevant point. But is it clear that is a very, very strong reason to simulate some people in detail? It feels like it might be salient because it is what makes us stand out, and someone who has the most energy-efficient brain in the Milky Way would think that was the obviously especially strong reason to simulate a mind, etc.
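The arithmetic in that list can be checked with a rough sketch. It assumes, as the argument does, that a confused mind costs about as much to run as a non-confused one, and it uses my guessed figure of a thousandth of mind-resources going to confused minds. The function names and the idea of a single ‘multiplier’ for how over-simulated a location is are mine, made up for illustration.

```python
from fractions import Fraction


def simulated_share(confused_fraction, multiplier=1):
    """Share of minds at an apparent location that are simulated.

    confused_fraction: assumed overall fraction of mind-resources spent
        on confused (simulated) minds, with equal cost per mind.
    multiplier: hypothetical factor by which this location is simulated
        more often than an average location.
    """
    simulated = multiplier * confused_fraction
    real = 1 - confused_fraction
    return simulated / (simulated + real)


def break_even_multiplier(confused_fraction):
    """Multiplier at which simulated and real minds are equally common."""
    return (1 - confused_fraction) / confused_fraction


guess = Fraction(1, 1000)  # the 'less than a thousandth' guess from the list

print(simulated_share(guess))         # 1/1000
print(break_even_multiplier(guess))   # 999
print(simulated_share(guess, 999))    # 1/2
```

So under these assumptions, our apparent location would need to be roughly a thousand times more simulated than average before simulated minds here outnumber real ones. A smaller guess for the confused fraction pushes the required multiplier up in proportion.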

I’m not sure what I think in the end, but for me this pushes back against the intuition that it’s so radically cheap, surely someone will do it. For instance from Bostrom:

We noted that a rough approximation of the computational power of a planetary-mass computer is 10^42 operations per second, and that assumes only already known nanotechnological designs, which are probably far from optimal. A single such a computer could simulate the entire mental history of humankind (call this an ancestor-simulation) by using less than one millionth of its processing power for one second. A posthuman civilization may eventually build an astronomical number of such computers. We can conclude that the computing power available to a posthuman civilization is sufficient to run a huge number of ancestor-simulations even it allocates only a minute fraction of its resources to that purpose. We can draw this conclusion even while leaving a substantial margin of error in all our estimates.

Simulating history so far might be extremely cheap. But if there are finite resources and astronomically many extremely cheap things, only a few will be done.

-

Shaming with and without naming

Suppose someone wrongs you and you want to emphatically mar their reputation, but only insofar as doing so is conducive to the best utilitarian outcomes. I was thinking about this one time and it occurred to me that there are at least two fairly different routes to positive utilitarian outcomes from publicly shaming people for apparent wrongdoings*:

A) People fear such shaming and avoid activities that may bring it about (possibly including the original perpetrator)

B) People internalize your values and actually agree more that the sin is bad, and then do it less

These things are fairly different, and don’t necessarily come together. I can think of shaming efforts that seem to inspire substantial fear of social retribution in many people (A) while often reducing sympathy for the object-level moral claims (B).

It seems like on a basic strategic level (ignoring the politeness of trying to change others’ values) you would much prefer to have B than A, because it is longer lasting, and doesn’t involve you threatening conflict with other people for the duration.

It seems to me that whether you name the person in your shaming makes a big difference to which of these you hit. If I say “Sarah Smith did [—]”, then Sarah is perhaps punished, and people in general fear being punished like Sarah (A). If I say “Today somebody did [—]”, then Sarah can’t get any social punishment, so nobody need fear that much (except for private shame), but you still get B—people having the sense that people think [—] is bad, and thus also having the sense that it is bad. Clearly not naming Sarah makes it harder for A) to happen, but I also have the sense—much less clearly—that by naming Sarah you actually get less of B).

This might be too weak a sense to warrant speculation, but in case not—why would this be? Is it because you are allowed to choose without being threatened, and with your freedom, you want to choose the socially sanctioned one? Whereas if someone is named you might be resentful and defensive, which is antithetical to going along with the norm that has been bid for? Is it that if you say Sarah did the thing, you have set up two concrete sides, you and Sarah, and observers might be inclined to join Sarah’s side instead of yours? (Or might already be on Sarah’s side in all manner of you-Sarah distinctions?)

Is it even true that not naming gets you more of B?

—

*NB: I haven’t decided if it’s almost ever appropriate to try to cause other people to feel shame, but it remains true that under certain circumstances fantasizing about it is an apparently natural response.

-

Parasocial relationship logic

If:

- You become like the five people you spend the most time with (or something remotely like that)

- The people who are most extremal in good ways tend to be highly successful

Should you try to have 2-3 of your five relationships be parasocial ones with people too successful to be your friend individually?

-

Deep and obvious points in the gap between your thoughts and your pictures of thought

Some ideas feel either deep or extremely obvious. You’ve heard some trite truism your whole life, then one day an epiphany lands and you try to save it with words, and you realize the description is that truism. And then you go out and try to tell others what you saw, and you can’t reach past their bored nodding. Or even you yourself, looking back, wonder why you wrote such tired drivel with such excitement.

When this happens, I wonder if it’s because the thing is true in your model of how to think, but not in how you actually think.

For instance, “when you think about the future, the thing you are dealing with is your own imaginary image of the future, not the future itself”.

On the one hand: of course. You think I’m five and don’t know broadly how thinking works? You think I was mistakenly modeling my mind as doing time-traveling and also enclosing the entire universe within itself? No I wasn’t, and I don’t need your insight.

But on the other hand one does habitually think of the hazy region one conjures connected to the present as ‘the future’ not as ‘my image of the future’, so when this advice is applied to one’s thinking—when the future one has relied on and cowered before is seen to evaporate in a puff of realizing you were overly drawn into a fiction—it can feel like a revelation, because it really is news to how you think, just not how you think a rational agent thinks.

-

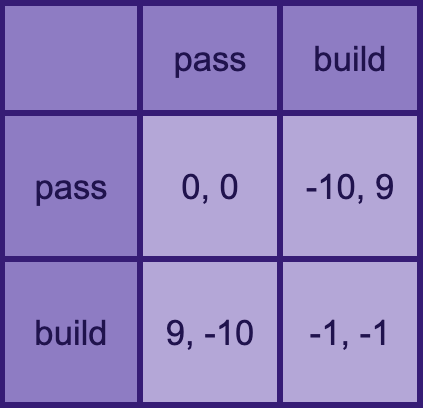

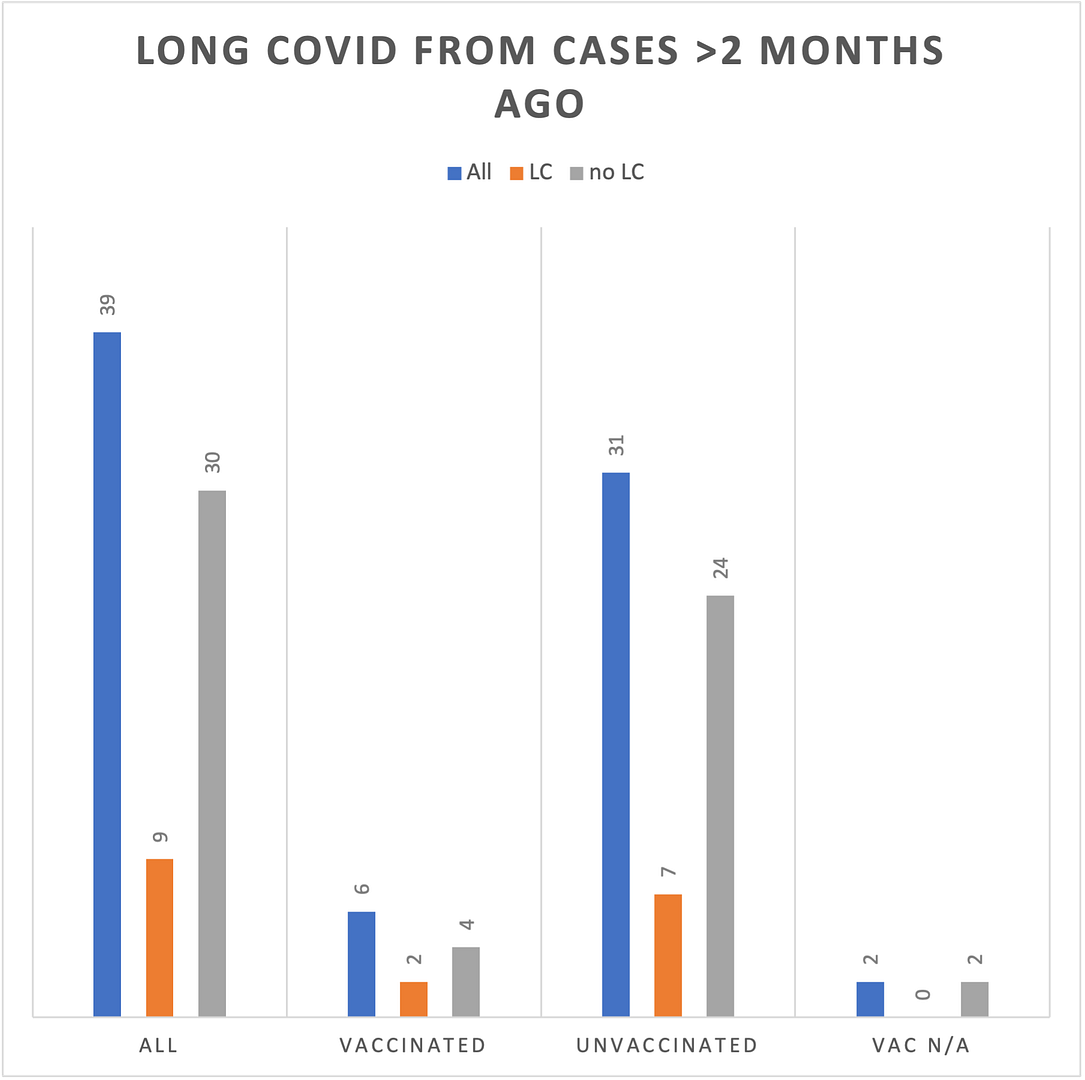

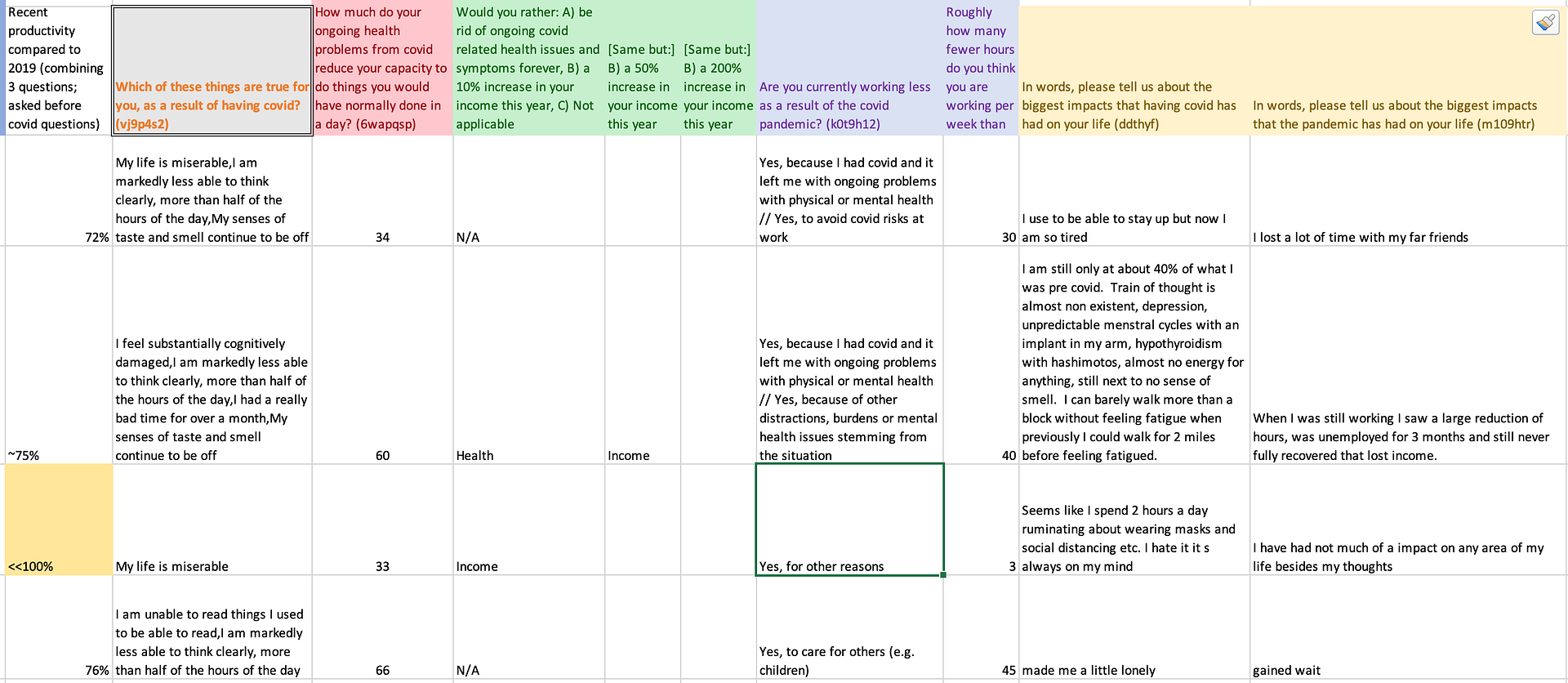

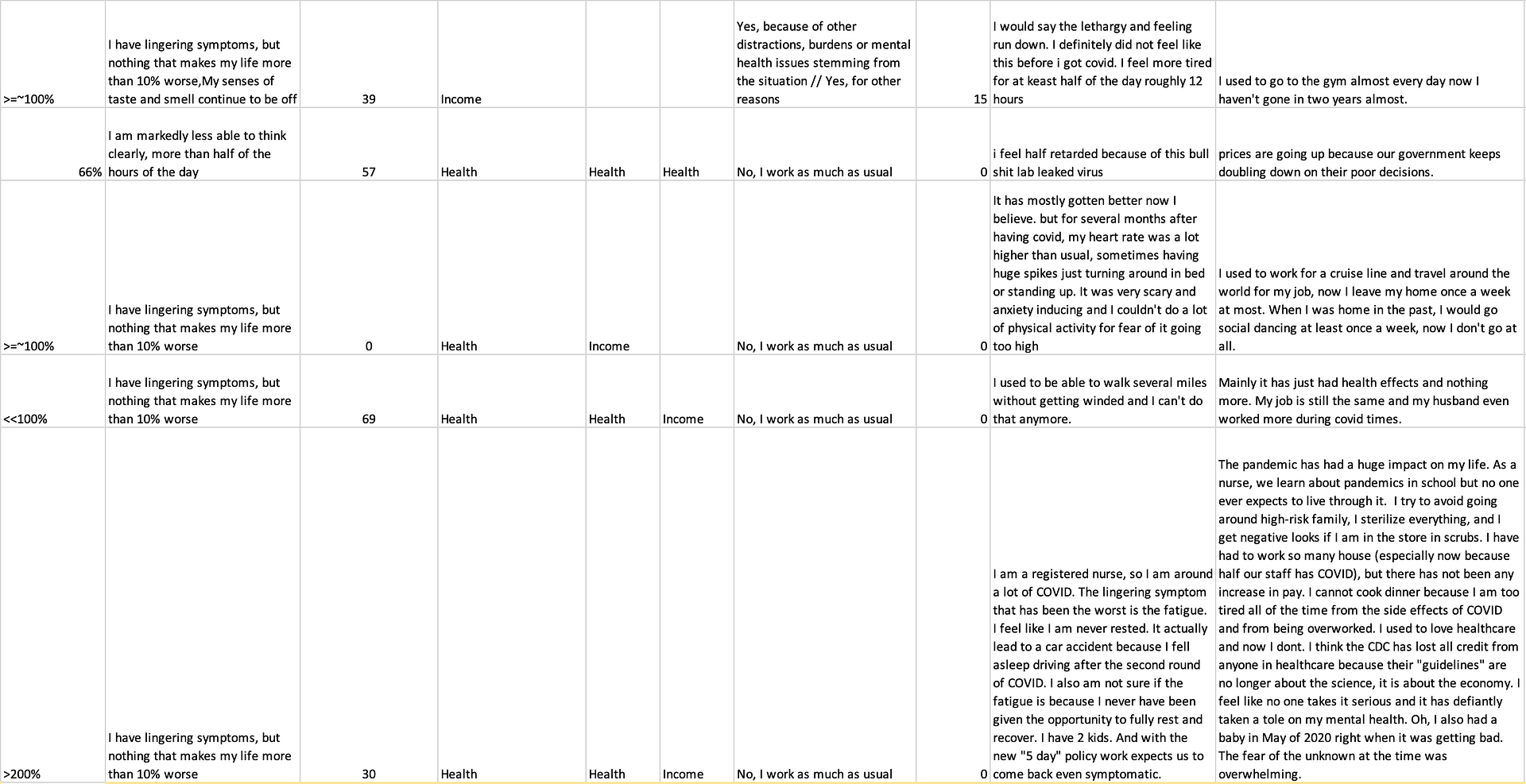

Survey of 2,778 AI authors: six parts in pictures

Crossposted from AI Impacts blog

The 2023 Expert Survey on Progress in AI is out, this time with 2,778 participants from six top AI venues (up from about 700 and two in the 2022 ESPAI), making it probably the biggest ever survey of AI researchers.

People answered in October, an eventful fourteen months after the 2022 survey, which had mostly identical questions for comparison.

Here is the preprint. And here are six interesting bits in pictures (with figure numbers matching paper, for ease of learning more):

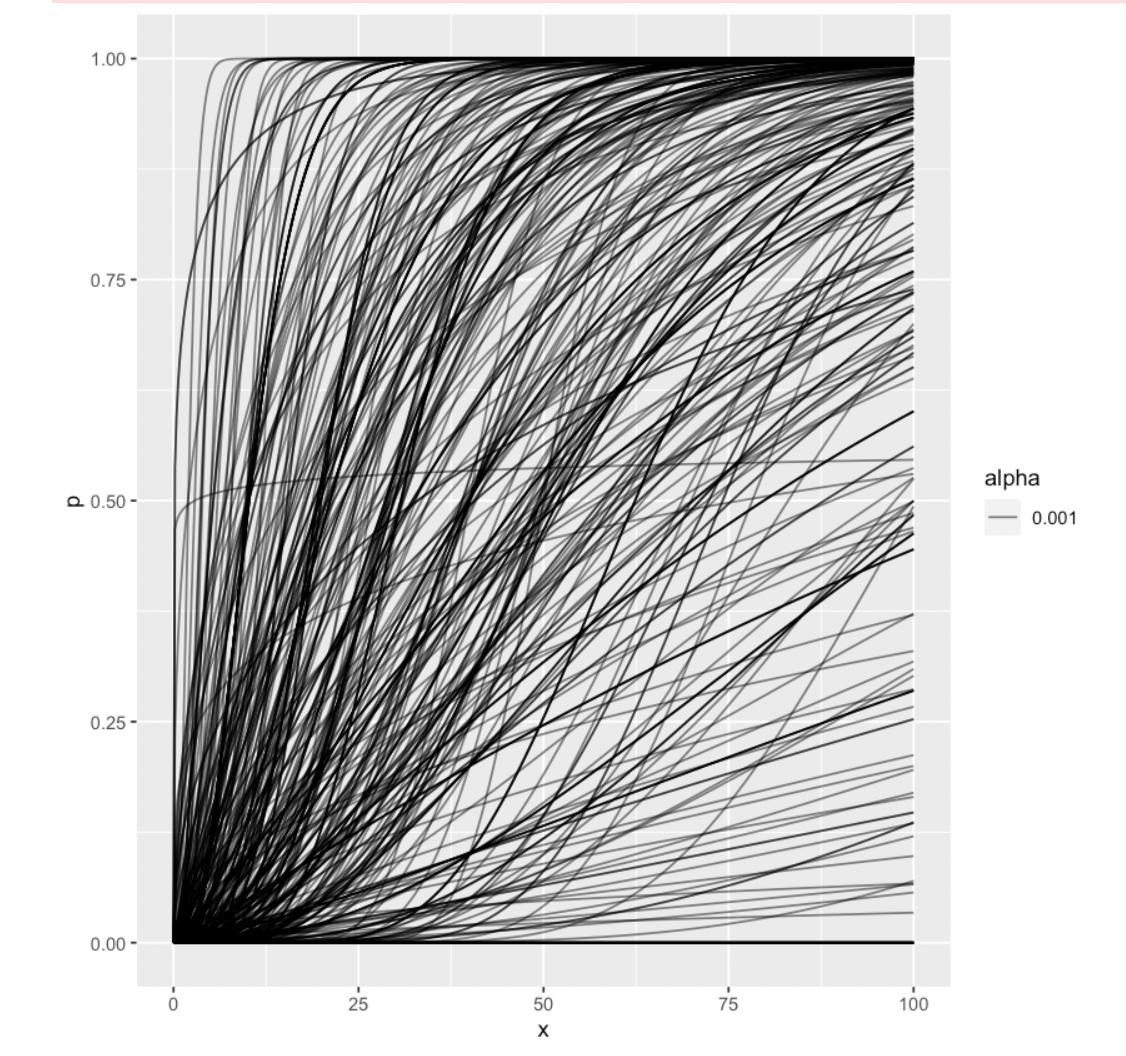

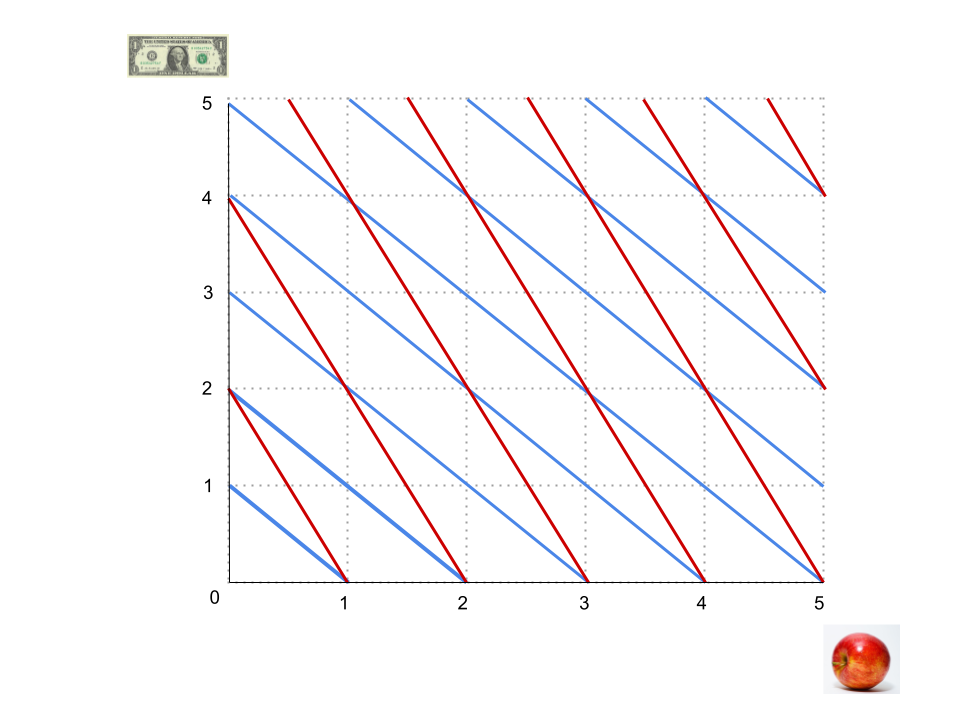

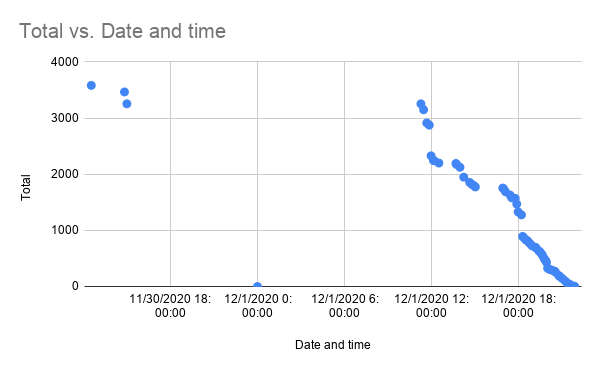

1. Expected time to human-level performance dropped 1-5 decades since the 2022 survey. As always, our questions about ‘high level machine intelligence’ (HLMI) and ‘full automation of labor’ (FAOL) got very different answers, and individuals disagreed a lot (shown as thin lines below), but the aggregate forecasts for both sets of questions dropped sharply. For context, between the 2016 and 2022 surveys, the forecast for HLMI had only shifted about a year.

(Fig 3)

(Fig 4)

2. Time to most narrow milestones decreased, some by a lot. AI researchers are expected to be professionally fully automatable a quarter of a century earlier than in 2022, and NYT bestselling fiction dropped by more than half to ~2030. Within five years, AI systems are forecast to be feasible that can fully make a payment processing site from scratch, or entirely generate a new song that sounds like it’s by e.g. Taylor Swift, or autonomously download and fine-tune a large language model.

(Fig 2)

3. Median respondents put 5% or more on advanced AI leading to human extinction or similar, and a third to a half of participants gave 10% or more. This was across four questions, one about overall value of the future and three more directly about extinction.

(Fig 10)

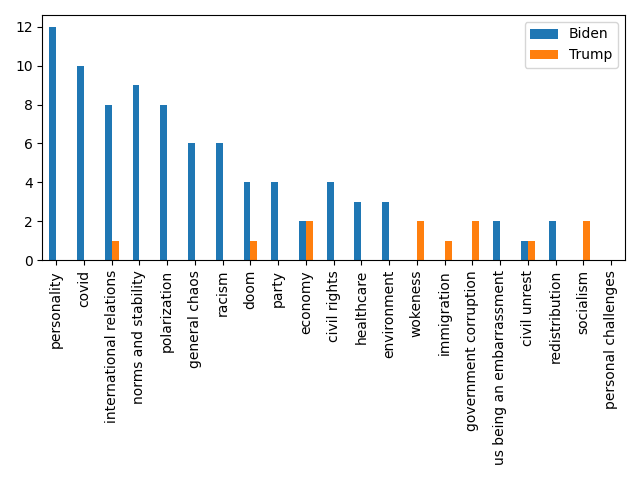

4. Many participants found many scenarios worthy of substantial concern over the next 30 years. For every one of eleven scenarios and ‘other’ that we asked about, at least a third of participants considered it deserving of substantial or extreme concern.

(Fig 9)

5. There are few confident optimists or pessimists about advanced AI: high hopes and dire concerns are usually found together. 68% of participants thought HLMI was more likely to lead to good outcomes than bad, but nearly half of these people put at least 5% on extremely bad outcomes such as human extinction, and 59% of net pessimists gave 5% or more to extremely good outcomes.

(Fig 11: a random 800 responses as vertical bars, higher definition below)

6. 70% of participants would like to see research aimed at minimizing risks of AI systems be prioritized more highly. This is much like 2022, and in both years a third of participants asked for “much more”—more than doubling since 2016.

(Fig 15)

If you enjoyed this, the paper covers many other questions, as well as more details on the above. What makes AI progress go? Has it sped up? Would it be better if it were slower or faster? What will AI systems be like in 2043? Will we be able to know the reasons for their choices before then? Do people from academia and industry have different views? Are concerns about AI due to misunderstandings of AI research? Do people who completed undergraduate study in Asia put higher chances on extinction from AI than those who studied in America? Is the ‘alignment problem’ worth working on?

-

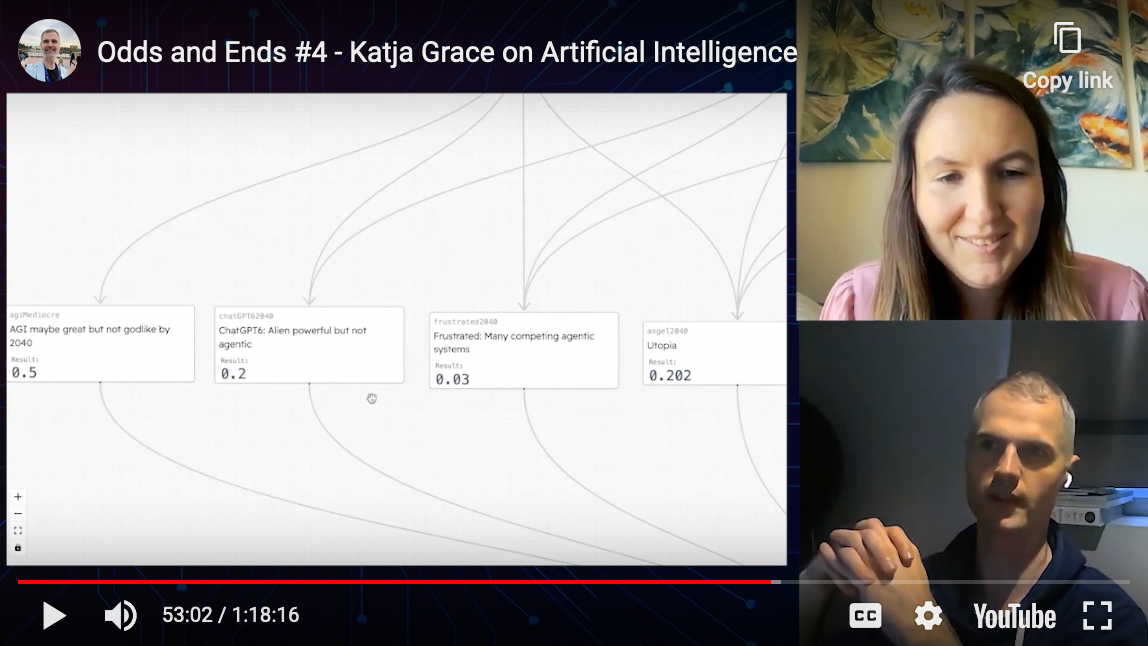

I put odds on ends with Nathan Young

I forgot to post this in August when we did it, so one might hope it would be out of date now but luckily/sadly my understanding of things is sufficiently coarse-grained that it probably isn’t much. Though all this policy and global coordination stuff of late sounds promising.

-

A to Z of things

I wanted to give my good friends’ baby a book, in honor of her existence. And I recalled children’s books being an exciting genre. Yet checking in on that thirty years later, Amazon had none I could super get behind. They did have books I used to like, but for reasons now lost. And I wonder if as a child I just had no taste because I just didn’t know how good things could be.

What would a good children’s book be like?

When I was about sixteen, I thought one reasonable thing to have learned when I was about two would have been the concepts of ‘positive feedback loop’ and ‘negative feedback loop’, then being taught in my year 11 class. Very interesting, very bleedingly obvious once you saw it. Why not hear about this as soon as one is coherent? Evolution, if I recall, seemed similar.

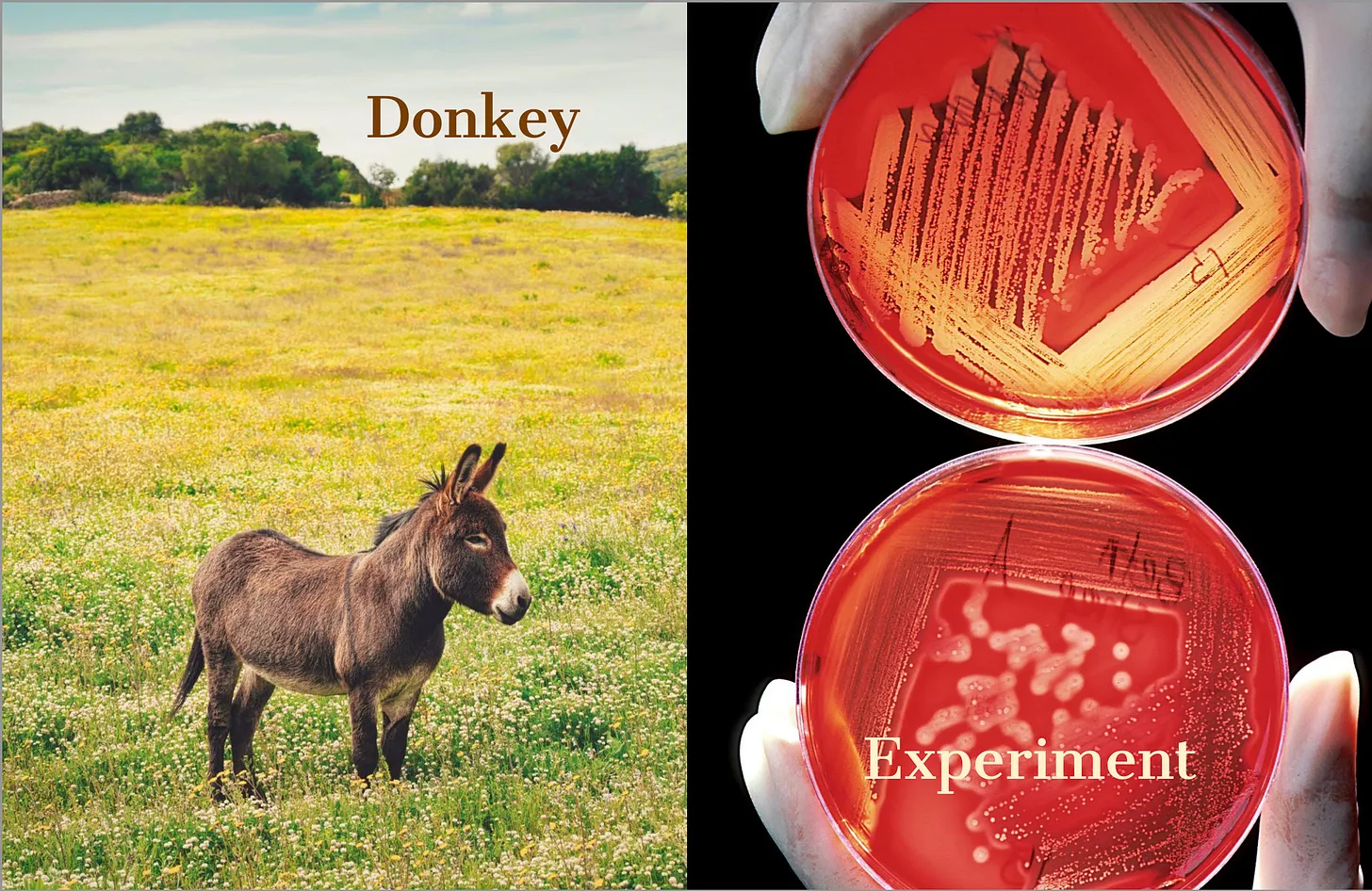

Here I finally enact my teenage self’s vision, and present A to Z of things, including some very interesting things that you might want a beautiful illustrative prompt to explain to your child as soon as they show glimmerings of conceptual thought: levers, markets, experiments, Greece, computer hardware, reference classes, feedback loops, (trees).

I think so far, the initial recipient is most fond of the donkey, in fascinating support of everyone else’s theories about what children are actually into. (Don’t get me wrong, I also like donkeys—when I have a second monitor, I just use it to stream donkey cams.) But perhaps one day donkeys will be a gateway drug to monkeys, and monkeys to moths, and moths will be resting on perfectly moth-colored trees, and BAM! Childhood improved.

Anyway, if you want a copy, it’s now available in an ‘email it to a copy shop and get it printed yourself’ format! See below. Remember to ask for card that is stronger than your child’s bite.

[Front]

[Content]

-

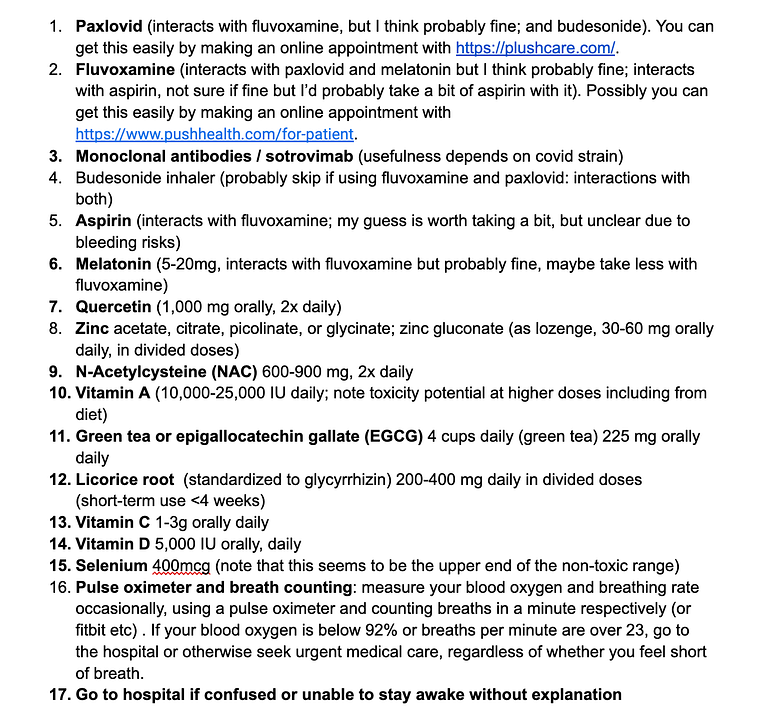

The other side of the tidal wave

I guess there’s maybe a 10-20% chance of AI causing human extinction in the coming decades, but I feel more distressed about it than even that suggests—I think because in the case where it doesn’t cause human extinction, I find it hard to imagine life not going kind of off the rails. So many things I like about the world seem likely to be over or badly disrupted with superhuman AI (writing, explaining things to people, friendships where you can be of any use to one another, taking pride in skills, thinking, learning, figuring out how to achieve things, making things, easy tracking of what is and isn’t conscious), and I don’t trust that the replacements will be actually good, or good for us, or that anything will be reversible.

Even if we don’t die, it still feels like everything is coming to an end.

-

Robin Hanson and I talk about AI risk

From this afternoon: here

Our previous recorded discussions are here.

-

Have we really forsaken natural selection?

Natural selection is often charged with having goals for humanity, and humanity is often charged with falling down on them. The big accusation, I think, is of sub-maximal procreation. If we cared at all about the genetic proliferation that natural selection wanted for us, then this time of riches would be a time of fifty-child families, not one of coddled dogs and state-of-the-art sitting rooms.

But (the story goes) our failure is excusable, because instead of a deep-seated loyalty to genetic fitness, natural selection merely fitted humans out with a system of suggestive urges: hungers, fears, loves, lusts. Which all worked well together to bring about children in the prehistoric years of our forebears, but no more. In part because all sorts of things are different, and in part because we specifically made things different in that way on purpose: bringing about children gets in the way of the further satisfaction of those urges, so we avoid it (the story goes).

This is generally floated as an illustrative warning about artificial intelligence. The moral is that if you make a system by first making multitudinous random systems and then systematically destroying all the ones that don’t do the thing you want, then the system you are left with might only do what you want while current circumstances persist, rather than being endowed with a consistent desire for the thing you actually had in mind.

Observing acquaintances dispute this point recently, it struck me that humans are actually weirdly aligned with natural selection, more than I could easily account for.

Natural selection, in its broadest, truest, (most idiolectic?) sense, doesn’t care about genes. Genes are a nice substrate on which natural selection famously makes particularly pretty patterns by driving a sensical evolution of lifeforms through interesting intricacies. But natural selection’s real love is existence. Natural selection just favors things that tend to exist. Things that start existing: great. Things that, having started existing, survive: amazing. Things that, while surviving, cause many copies of themselves to come into being: especial favorites of evolution, as long as there’s a path to the first ones coming into being.

So natural selection likes genes that promote procreation and survival, but also likes elements that appear and don’t dissolve, ideas that come to mind and stay there, tools that are conceivable and copyable, shapes that result from myriad physical situations, rocks at the bottoms of mountains. Maybe this isn’t the dictionary definition of natural selection, but it is the real force in the world, of which natural selection of reproducing and surviving genetic clusters is one facet. Generalized natural selection—the thing that created us—says that the things that you see in the world are those things that exist best in the world.

So what did natural selection want for us? What were we selected for? Existence.

And while we might not proliferate our genes spectacularly well in particular, I do think we have a decent shot at a very prolonged existence. Or the prolonged existence of some important aspects of our being. It seems plausible that humanity makes it to the stars, galaxies, superclusters. Not that we are maximally trying for that any more than we are maximally trying for children. And I do think there’s a large chance of us wrecking it with various existential risks. But it’s interesting to me that natural selection made us for existing, and we look like we might end up just totally killing it, existence-wise. Even though natural selection purportedly did this via a bunch of hackish urges that were good in 200,000 BC but you might have expected to be outside their domain of applicability by 2023. And presumably taking over the universe is an extremely narrow target: it can only be done by so many things.

Thus it seems to me that humanity is plausibly doing astonishingly well on living up to natural selection’s goals. Probably not as well as a hypothetical race of creatures who each harbors a monomaniacal interest in prolonged species survival. And not so well as to be clear of great risk of foolish speciocide. But still staggeringly well.

-

We don't trade with ants

When discussing advanced AI, sometimes the following exchange happens:

“Perhaps advanced AI won’t kill us. Perhaps it will trade with us”

“We don’t trade with ants”

I think it’s interesting to get clear on exactly why we don’t trade with ants, and whether it is relevant to the AI situation.

When a person says “we don’t trade with ants”, I think the implicit explanation is that humans are so big, powerful and smart compared to ants that we don’t need to trade with them because they have nothing of value and if they did we could just take it; anything they can do we can do better, and we can just walk all over them. Why negotiate when you can steal?